Chapter 6 tsvets package

6.1 Introduction

The vector exponential additive smoothing model (Vector ETS), introduced in De Silva, Hyndman, and Snyder (2010) naturally generalizes the univariate framework with a great deal of flexibility with the dynamics of the unobserved components. The dynamics of the states can be common, diagonal, grouped or fully parameterized, allowing for a rich set of patterns to be captured. The tsvets package implements a more general and flexible version based on Athanasopoulos and Silva (2012), with methods for estimation, inference, visualization, forecasting as well as aggregation. As of version 0.4.3, missing values are allowed in the series. This works by zeroing our the errors (i.e. taking the expectation) during the step update step for those series with missingness at time t, whilst the covariance and likelihood is calculated on the joint non-missingness data.

Let the vector of \(N\) series at time \(t\), \(\bf{y}_t\) be represented as a linear additive combination of their unobserved level, slope and seasonal (with frequency \(m\)) components, \(\bf{l}_{t-1}\), \(\bf{b}_{t-1}\) and \(\bf{s}_{t-m}\) respectively. Formally, the conditional mean of \(\bf{\hat y}_t\) is given by:

\[\begin{equation} \bf{\hat y}_t = \bf{l}_{t - 1} + \Phi\bf{b}_{t - 1} + \bf{s}_{t - m}, \tag{6.1} \end{equation}\]

where \(\Phi\) is the \(N\times N\) matrix of dampening parameters. In its reduced form, the model without seasonality is equivalent to a VARIMA(1,2,2) model,15 and \(\Phi\) becomes the matrix of first order autoregressive coefficients.

The 1 step ahead forecast errors, \(\boldsymbol{\varepsilon}_t\) follow a multivariate normal distribution:

\[\begin{equation} {\boldsymbol{\varepsilon} _t} = {\bf{y}_t} - {{\bf{\hat y}}_t},\quad {\boldsymbol{\varepsilon} _t} \sim {\bf{N}}\left( {{\bf{0}},\Sigma } \right). \tag{6.2} \end{equation}\]

The state equations have the following dynamics:

\[\begin{equation} \begin{array}{l} {\bf{l}_t} = {\bf{l}_{t - 1}} + \Phi {\bf{b}_{t - 1}} + {\bf{A}}{\boldsymbol{\varepsilon}_t},\\ {\bf{b}_t} = \Phi {\bf{b}_{t - 1}} + {\bf{B}}{\boldsymbol{\varepsilon}_t},\\ {\bf{s}_t} = {\bf{s}_{t - m}} + {G_1}K{\boldsymbol{\varepsilon}_t},\\ {\bf{s}_{t - m}} = {\bf{s}_{t - i}} + {G_2}K{\boldsymbol{\varepsilon}_t}, \end{array} \tag{6.3} \end{equation}\]

where the matrices \(\mathbf{A}\), \(\mathbf{B}\) and \(\mathbf{K}\) represent the adjustment of the vector components to the errors, and can be diagonal, fully parameterized or scalar (common adjustment).

In vector innovations state space form, the system can be written as follows:

\[\begin{equation} \begin{array}{l} {\bf{y}_t} = H{\bf{x}_{t - 1}} + {\boldsymbol{\varepsilon}_t},\\ {\bf{x}_t} = F{\bf{x}_{t - 1}} + GA{\boldsymbol{\varepsilon}_t} \end{array} \tag{6.4} \end{equation}\]

where the matrices \(H\), \(F\), \(G\) and \(A\) are composed as follows:

\[\begin{equation} \mathop H\limits_{\left( {m + 2} \right)N \times N} = \left[ {\begin{array}{*{20}{c}} {{I_N}}\\ {{I_N}}\\ {{0_{N \times N}}}\\ \vdots \\ {{0_{N \times N}}}\\ {{I_N}} \end{array}} \right],\quad \mathop A\limits_{\left( {m + 2} \right)N \times N} = \left[ {\begin{array}{*{20}{c}} {\bf{A}}\\ {\bf{B}}\\ {\bf{K}}\\ \vdots \\ \vdots \\ {\bf{K}} \end{array}} \right], \tag{6.5} \end{equation}\]

\[\begin{equation} \mathop F\limits_{\left( {m + 2} \right)N \times \left( {m + 2} \right)N} = \left[ {\begin{array}{*{20}{c}} {{I_N}}&{{I_N}}&{{0_{N \times mN}}}\\ {{0_{N \times N}}}&{{\Phi _N}}&{{0_{N \times mN}}}\\ {{0_{mN \times N}}}&{{0_{mN \times N}}}&{\tilde{F} \otimes {I_N}} \end{array}} \right],\mathop {\tilde{F}}\limits_{m \times m} = \left[ {\begin{array}{*{20}{c}} 0&0&0& \cdots &0&1\\ 1&0&0& \cdots &0&0\\ 0&1&0& \cdots &0&0\\ \vdots & \vdots & \vdots & \ddots & \vdots & \vdots \\ 0&0&0& \cdots &1&0 \end{array}} \right], \tag{6.6} \end{equation}\]

\[\begin{equation} \mathop G\limits_{\left( {m + 2} \right)N \times \left( {m + 2} \right)N} = \left[ {\begin{array}{*{20}{c}} {{I_N}}&{{0_{N \times N}}}&{{0_{N \times mN}}}\\ {{0_{N \times N}}}&{{I_N}}&{{0_{N \times mN}}}\\ {{0_{mN \times N}}}&{{0_{mN \times N}}}&{\tilde{G} \otimes {I_N}} \end{array}} \right], \tag{6.7} \end{equation}\]

\[\begin{equation} \mathop {\tilde{G}}\limits_{m \times m} = \left[ {\begin{array}{*{20}{c}} {\frac{{m - 1}}{m}}&0& \cdots &0&0\\ 0&{ - \frac{1}{m}}& \cdots &0&0\\ 0&0&{ - \frac{1}{m}}&0&0\\ \vdots & \vdots & \vdots & \ddots & \vdots \\ 0&0&0& \cdots &{ - \frac{1}{m}} \end{array}} \right], \tag{6.8} \end{equation}\]

\[\begin{equation} \mathop {{\bf{x}_t}}\limits_{\left( {m + 2} \right)N \times N} = \left[ {\begin{array}{*{20}{c}} {{\bf{l}_t}}\\ {{\bf{b}_t}}\\ {{\bf{s}_t}}\\ \vdots \\ {{\bf{s}_{t - m + 2}}}\\ {{\bf{s}_{t - m + 1}}} \end{array}} \right]. \tag{6.9} \end{equation}\]

The values in the seasonal matrix \(\tilde{G}\) represent normalization terms which ensure that the seasonal component adds to zero throughout the updating process without becoming contaminated by the level component.

6.2 Inclusion of External Regressors

We augment the model with the ability to include external regressors (\(z_t\)) such that:

\[\begin{equation} \begin{array}{l} {\bf{y}_t} = H{\bf{x}_{t - 1}} + \mathbf{W}\bf{z}_t + {\boldsymbol{\varepsilon}_t},\\ {\bf{x}_t} = F{\bf{x}_{t - 1}} + GA{\boldsymbol{\varepsilon}_t}, \end{array} \tag{6.10} \end{equation}\]

where the \(W\) is the matrix of coefficients which are time invariant. The xreg_include argument in the vets_modelspec function is a design matrix which allows one to define which coefficients should be set to zero, which should be estimated individually as well as which should be pooled (see the documentation for more details). The index on \(\bf{z}\) is \(t\) and not \(t-1\) and it is left to the user to pre-lag any regressors passed to the function.

6.3 Log-Likelihood

The log-likelihood (\(L\)) of the model follows from the assumption that the innovations \(\boldsymbol{\varepsilon_t}\sim N\left(\bf{0}, \Sigma\right)\), leading to the following function:

\[\begin{equation} L\left(\boldsymbol{\theta} \right) = \frac{T}{2}\left( {N\log \left( {2\pi } \right) + N\log \left| \Sigma \right|} \right) - \frac{1}{2}\sum\limits_{t = 1}^T {\boldsymbol{\varepsilon_t}{\Sigma ^{ - 1}}\boldsymbol{\varepsilon_t}}, \tag{6.11} \end{equation}\]

where \(\boldsymbol{\theta}\) is the vector of parameters being optimized. We also concentrate out the parameters of the covariance matrix by using its ML estimator

\[\begin{equation} \hat \Sigma = \frac{1}{T}\sum\limits_{t = 1}^T {{\boldsymbol{\varepsilon}_t}{{\boldsymbol{\varepsilon}'}_t}}. \tag{6.12} \end{equation}\]

Therefore, the vector ETS log-likelihood is proportional to

\[\begin{equation} L\left(\boldsymbol{\theta} \right) \propto \sum\limits_{t = 1}^T {{{\boldsymbol{\varepsilon}'}_t}{{\hat \Sigma }^{ - 1}}{\boldsymbol{\varepsilon}_t}}, \tag{6.13} \end{equation}\]

which requires looping through each \(t\) to calculate the quadratic form which is expensive. Instead, we can make use of the following relationship, assuming positive-definite or positive-semi-definite \(\hat \Sigma\), \({\hat\Sigma ^{-1}} = Q\Lambda^{-1} Q'\), where \(\Lambda\) is the diagonal matrix of eigenvalues of \(\hat\Sigma\). We then have \({\varepsilon _t} = Q{\bf{u}_t}\) where \(\bf{u}_t\) are the projections onto the eigenvectors \(Q\). The negative of the log-likelihood can be represented as:

\[\begin{equation} L\left( \boldsymbol{\theta} \right) = \frac{1}{2}T\left( {N\log 2\pi - \log \left| {\hat \Sigma } \right|} \right) + \frac{1}{2}{{{\bf{1'}}}_T}\left( {{{\left( {\varepsilon Q} \right)}^2}\frac{1}{\lambda }} \right), \tag{6.14} \end{equation}\]

where we have used the relation \(Q^{-1} = Q'\) due to \(\hat{\Sigma}\) being symmetric (and thus \(Q\) is orthogonal), and \(1 / \lambda\) is a vector containing the reporicals of the eigenvalues.

Additionally, we constrain the \(N+1\) largest eigenvalues (\(\lambda_s\)) to be less than 1 to ensure invertibility of the system, such that

\[\begin{equation} {\lambda _s}\left( D \right) < 1,D = F - GAH. \tag{6.15} \end{equation}\]

This is added as a soft barrier constraint. The diagonal elements of the level, slope, dampening and seasonal matrices are bounded between 0 and 1, while the off diagonal elements are allowed to vary between -1 and 1.16

Finally, the initial seed values for each of the states are approximated using the heuristic approach from the univariate ETS model as described in Section 4.5.2.

6.4 Dependence Structure

While Equation (5.10) assumes a full covariance matrix, allowing contemporaneous associations among the residuals, we also offer 3 additional estimators for the dependence structure: diagonal covariance, equicorrelation and shrinkage covariance based on the estimator of Ledoit and Wolf (2004).

6.4.1 Diagonal Covariance

The diagonal covariance matrix is the one used by Athanasopoulos and Silva (2012), and leads to the fastest estimation. In this case, the log-likelihood is greatly simplified and equal to

\[\begin{equation} \frac{T}{2}\left( {N\log \left( {2\pi } \right) + N\log \left| \Sigma \right|} \right) - \frac{1}{2}\sum\limits_{t = 1}^T {\sum\limits_{i = 1}^N {\varepsilon _{it}^2/\sigma _i^2} }. \tag{6.16} \end{equation}\]

6.4.2 Equicorrelation

The equicorrelation covariance assumes that the correlation across all series is set to some common value \(\rho\). The correlation matrix \(\bf{R}\) can be calculated as

\[\begin{equation} {\bf{R}} = \rho {\bf{11'}} + \left( {1 - \rho } \right){\bf{I}}, \tag{6.17} \end{equation}\]

which is guaranteed to be positive definite as long as \(-\frac{1}{{N - 1}}<\rho< 1\). The covariance is then equal to:

\[\begin{equation} {\Sigma} = {\bf{DRD'}}, \tag{6.18} \end{equation}\]

where \({\bf{D}} = diag\left( {{\hat \sigma _1},\dots,{\hat \sigma _n}} \right)\). Some of the advantages of assuming equicorrelation are discussed in Clements, Scott, and Silvennoinen (2015).

6.4.3 Shrinkage Covariance

The shrinkage estimator of Ledoit and Wolf (2004) follows from the observation that the eigenvalues of the estimated correlations tend to be more dispersed than the eigenvalues of the true data generating process. The shrinkage estimator of the covariance is based on a convex combination of the sample covariance \(\hat\Sigma\) and a target covariance set to a multiple of the identity matrix. It is this combination weight \(\rho\) which we estimate in the case of the shrinkage estimator

\[\begin{equation} {\Sigma} = \left( {1 - \rho } \right)\Sigma + \frac{\rho }{n}tr\left( \Sigma \right){\bf{I}}. \tag{6.18} \end{equation}\]

6.5 Grouping and Pooling

The tsvets package allows both global pooling of state component coefficients as well as group-wise pooling. For instance, if we had a large-dimensional system composed of series which have some common grouping structure (e.g. geographical, feature or statistical based), we could impose that these groups have common dynamics for some or all of the components. We provide an example of this in the demonstration section.

6.6 Homogeneous Coefficients and Aggregation

When a model is estimated with all components pooled (i.e. common coefficients), then we can aggregate the model to obtain an aggregated representation in closed form, following Section 17.1.2 of R. Hyndman et al. (2008). When this is not the case, we can still obtain an aggregated series from the estimation and prediction objects. This functionality is implemented via the tsaggregate method and we provide an example in the demonstration section.

6.7 Demonstration

6.7.1 Specification

The specification function defines the entry point for setting up a vets model:

suppressWarnings(suppressPackageStartupMessages(library(tsvets)))

args(vets_modelspec)## function (y, level = c("constant", "diagonal", "common", "full",

## "grouped"), slope = c("none", "constant", "common", "diagonal",

## "full", "grouped"), damped = c("none", "common", "diagonal",

## "full", "grouped"), seasonal = c("none", "common", "diagonal",

## "full", "grouped"), group = NULL, xreg = NULL, xreg_include = NULL,

## frequency = 1, transformation = "box-cox", lambda = NULL,

## lower = 0, upper = 1, dependence = c("diagonal", "full",

## "equicorrelation", "shrinkage"))

## NULLThe specification has options for how the components of level, slope, dampening, seasonality are structured as well as the type of dependence to use. We also allow multicore processing since we require the initial state vectors to be calculated through calls to the tsets package, which can be done in parallel. The lambda argument can be set either to NA, in which case the multivariate version of the Box Cox transformation is used which targets a transformation to multivariate normality based on Velilla (1993) using the powerTransform function from the car package of Fox, Weisberg, and Price (2022), a vector of length equal to the number of series of a single number to apply to all series, and NULL in which case no transformation is performed.

The next sections provide fully worked examples with methods showcasing the functionality of the package under different assumptions.

6.7.2 Example: Australian Retail Sales

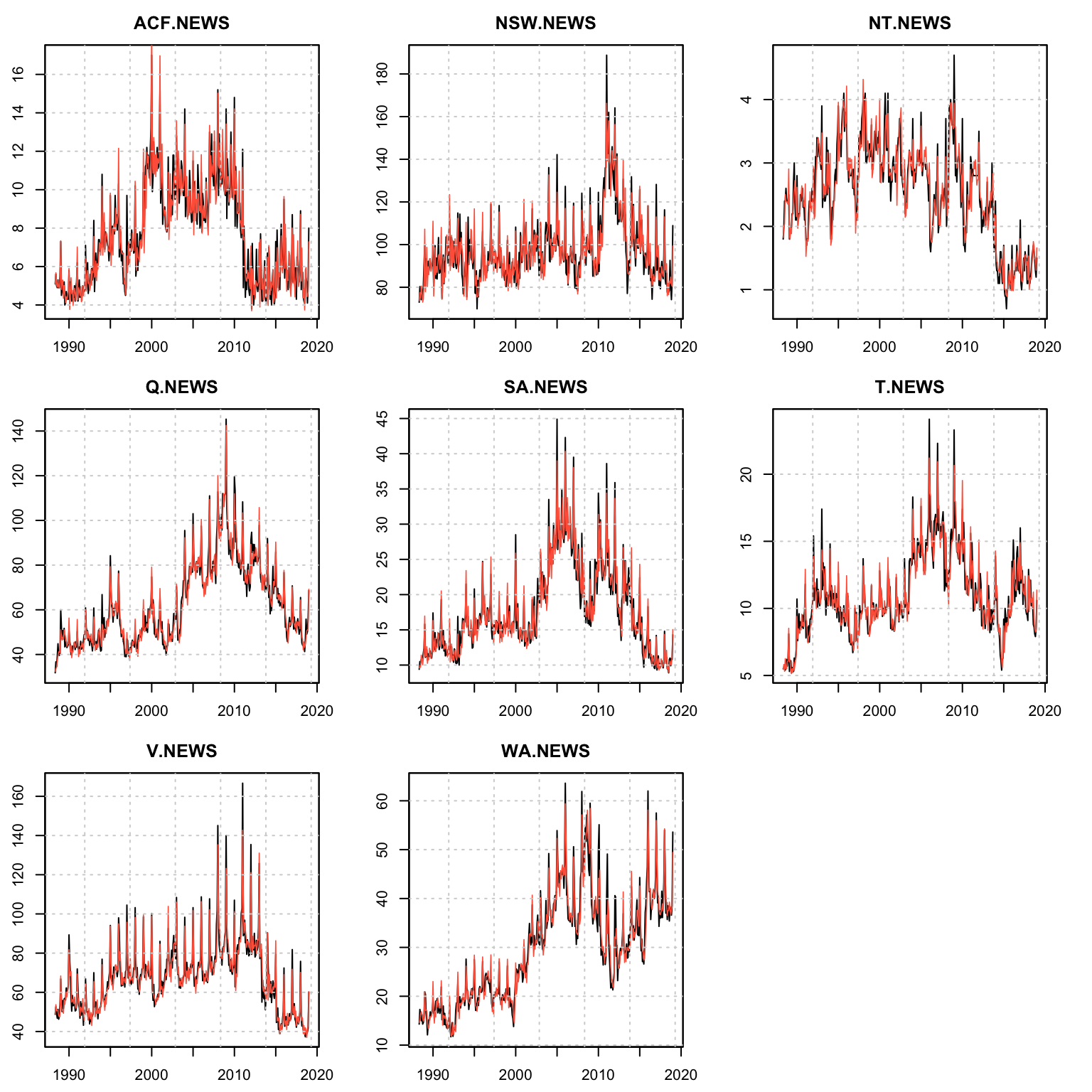

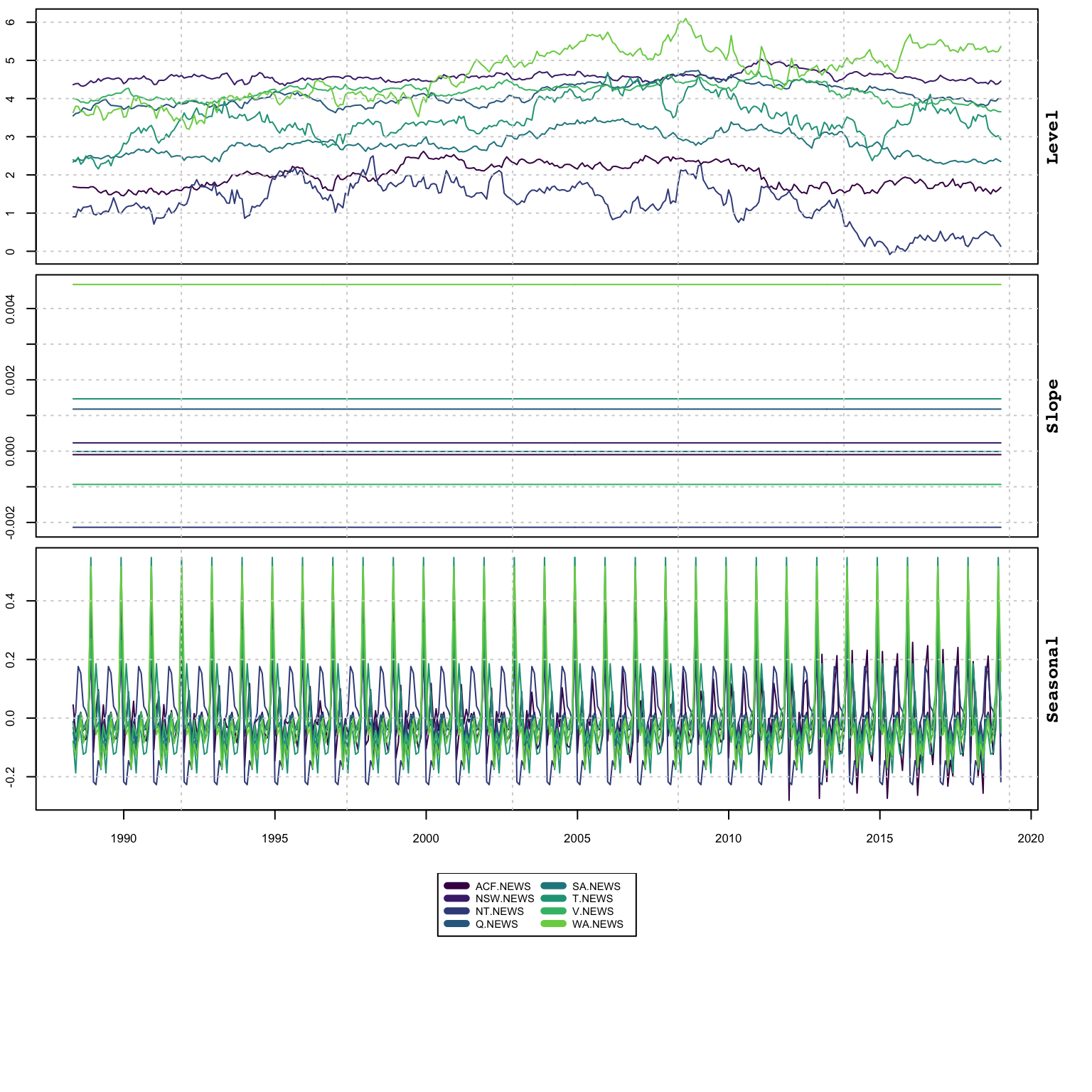

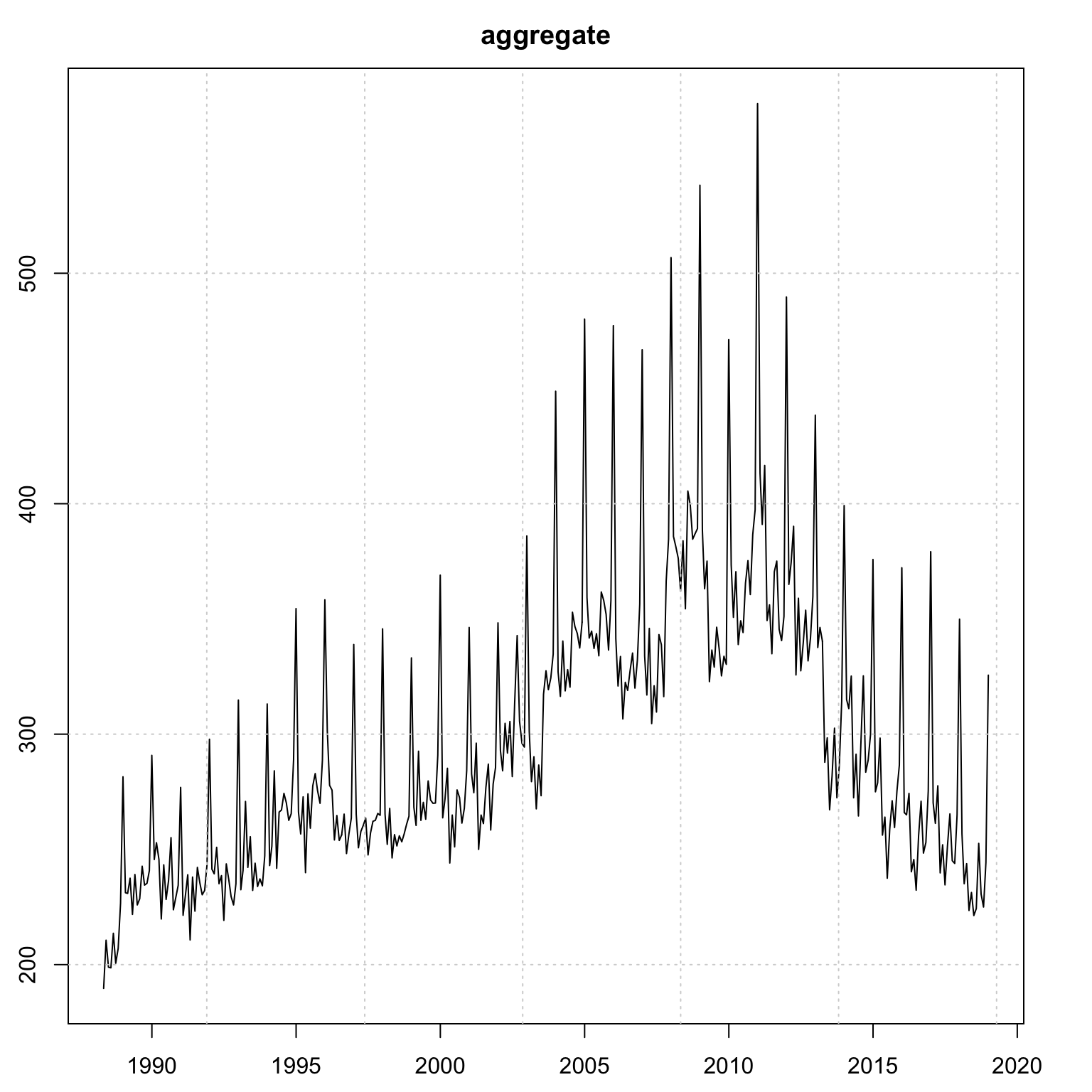

We use a subset of the Australian retail dataset from package tsdatasets representing the monthly retail turnover in $Million AUD across different regions for the news vendor category, with common level, constant slope and diagonal seasonal and dependence structure.

suppressWarnings(suppressPackageStartupMessages(library(tsvets)))

suppressMessages(library(tsmethods))

suppressMessages(library(tsaux))

suppressMessages(library(xts))

data("austretail", package = "tsdatasets")

y <- na.omit(austretail[,grepl("NEWS", colnames(austretail))])

spec <- vets_modelspec(y, level = "common", slope = "constant", damped = "none",

seasonal = "diagonal", lambda = NA, dependence = "diagonal",

frequency = 12)

mod <- estimate(spec, solver = "solnp", control = list(trace = 0))The joint estimation of the 8 series takes about 0.6130779 seconds. The summary object prints the full matrices for each component using the Matrix package of Bates, Maechler, and Jagan (2022). The summary method also take an optional weights argument which is used to calculate the weighted Accuracy Criteria, and when this is NULL, an equal weight vector is used instead (and hence equivalent to the Mean Criteria).

Similar to other packages, there is a diagnostics method tsdiagnose which prints the eiganvalues of the \(D\) matrix as well as the output from a multivariate Normality Test and Multivariate Outliers based on the mvn function of the MVN package of Korkmaz, Goksuluk, and Zararsiz (2021).

summary(mod)##

## Vector ETS

## -----------------------------------

## Level : common

## Slope : constant

## Seasonal : diagonal

## Dependence : diagonal

## No. Series : 8

## No. TimePoints : 369

##

## Parameter Matrices

##

## Level Matrix

## 8 x 8 diagonal matrix of class "ddiMatrix"

## [,1] [,2] [,3] [,4] [,5] [,6] [,7]

## [1,] 0.7237793 . . . . . .

## [2,] . 0.7237793 . . . . .

## [3,] . . 0.7237793 . . . .

## [4,] . . . 0.7237793 . . .

## [5,] . . . . 0.7237793 . .

## [6,] . . . . . 0.7237793 .

## [7,] . . . . . . 0.7237793

## [8,] . . . . . . .

## [,8]

## [1,] .

## [2,] .

## [3,] .

## [4,] .

## [5,] .

## [6,] .

## [7,] .

## [8,] 0.7237793

##

## Slope Matrix

## 8 x 8 diagonal matrix of class "ddiMatrix"

## [,1] [,2] [,3] [,4] [,5] [,6] [,7] [,8]

## [1,] 0 . . . . . . .

## [2,] . 0 . . . . . .

## [3,] . . 0 . . . . .

## [4,] . . . 0 . . . .

## [5,] . . . . 0 . . .

## [6,] . . . . . 0 . .

## [7,] . . . . . . 0 .

## [8,] . . . . . . . 0

##

## Seasonal Matrix

## 8 x 8 diagonal matrix of class "ddiMatrix"

## [,1] [,2] [,3] [,4] [,5] [,6]

## [1,] 0.2884931 . . . . .

## [2,] . 2.396104e-09 . . . .

## [3,] . . 3.553894e-09 . . .

## [4,] . . . 6.317263e-09 . .

## [5,] . . . . 4.995968e-09 .

## [6,] . . . . . 6.078841e-09

## [7,] . . . . . .

## [8,] . . . . . .

## [,7] [,8]

## [1,] . .

## [2,] . .

## [3,] . .

## [4,] . .

## [5,] . .

## [6,] . .

## [7,] 0.1520137 .

## [8,] . 3.009261e-09

##

## Correlation Matrix

## 8 x 8 diagonal matrix of class "ddiMatrix"

## ACF.NEWS NSW.NEWS NT.NEWS Q.NEWS SA.NEWS T.NEWS V.NEWS WA.NEWS

## ACF.NEWS 1 . . . . . . .

## NSW.NEWS . 1 . . . . . .

## NT.NEWS . . 1 . . . . .

## Q.NEWS . . . 1 . . . .

## SA.NEWS . . . . 1 . . .

## T.NEWS . . . . . 1 . .

## V.NEWS . . . . . . 1 .

## WA.NEWS . . . . . . . 1

##

## Information Criteria

## AIC BIC AICc

## 1536.99 2261.81 1547.42

##

## Accuracy Criteria

## Mean Weighted

## MAPE 0.0613 0.0613

## MSLRE 0.0067 0.0067Additional methods are similar to what is available in other packages.

Coefficients:

coef(mod)## Level[Common] Seasonal[ACF.NEWS] Seasonal[NSW.NEWS] Seasonal[NT.NEWS]

## 7.237793e-01 2.884931e-01 2.396104e-09 3.553894e-09

## Seasonal[Q.NEWS] Seasonal[SA.NEWS] Seasonal[T.NEWS] Seasonal[V.NEWS]

## 6.317263e-09 4.995968e-09 6.078841e-09 1.520137e-01

## Seasonal[WA.NEWS]

## 3.009261e-09Loglikelihood and AIC:

logLik(mod)## 'log Lik.' 1294.991 (df=121)AIC(mod)## [1] 1536.991Performance metrics with optional weights option:

tsmetrics(mod, weights = runif(8))## N no_pars LogLik AIC BIC AICc MAPE MSLRE

## 1 2952 121 1294.991 1536.991 2261.81 1547.424 0.06131029 0.00673102

## WAPE WSLRE

## 1 0.06051585 0.006598354tscor(mod)## 8 x 8 diagonal matrix of class "ddiMatrix"

## ACF.NEWS NSW.NEWS NT.NEWS Q.NEWS SA.NEWS T.NEWS V.NEWS WA.NEWS

## ACF.NEWS 1 . . . . . . .

## NSW.NEWS . 1 . . . . . .

## NT.NEWS . . 1 . . . . .

## Q.NEWS . . . 1 . . . .

## SA.NEWS . . . . 1 . . .

## T.NEWS . . . . . 1 . .

## V.NEWS . . . . . . 1 .

## WA.NEWS . . . . . . . 1Correlation and covariance matrices:

tscov(mod)## 8 x 8 diagonal matrix of class "ddiMatrix"

## ACF.NEWS NSW.NEWS NT.NEWS Q.NEWS SA.NEWS T.NEWS

## ACF.NEWS 0.0089999 . . . . .

## NSW.NEWS . 0.003625678 . . . .

## NT.NEWS . . 0.03950474 . . .

## Q.NEWS . . . 0.004023731 . .

## SA.NEWS . . . . 0.006397141 .

## T.NEWS . . . . . 0.03014232

## V.NEWS . . . . . .

## WA.NEWS . . . . . .

## V.NEWS WA.NEWS

## ACF.NEWS . .

## NSW.NEWS . .

## NT.NEWS . .

## Q.NEWS . .

## SA.NEWS . .

## T.NEWS . .

## V.NEWS 0.004275613 .

## WA.NEWS . 0.02804386tscor(mod)## 8 x 8 diagonal matrix of class "ddiMatrix"

## ACF.NEWS NSW.NEWS NT.NEWS Q.NEWS SA.NEWS T.NEWS V.NEWS WA.NEWS

## ACF.NEWS 1 . . . . . . .

## NSW.NEWS . 1 . . . . . .

## NT.NEWS . . 1 . . . . .

## Q.NEWS . . . 1 . . . .

## SA.NEWS . . . . 1 . . .

## T.NEWS . . . . . 1 . .

## V.NEWS . . . . . . 1 .

## WA.NEWS . . . . . . . 1Note that, even though we impose a diagonal covariance matrix structure, we still return the full covariance matrix in tscov.

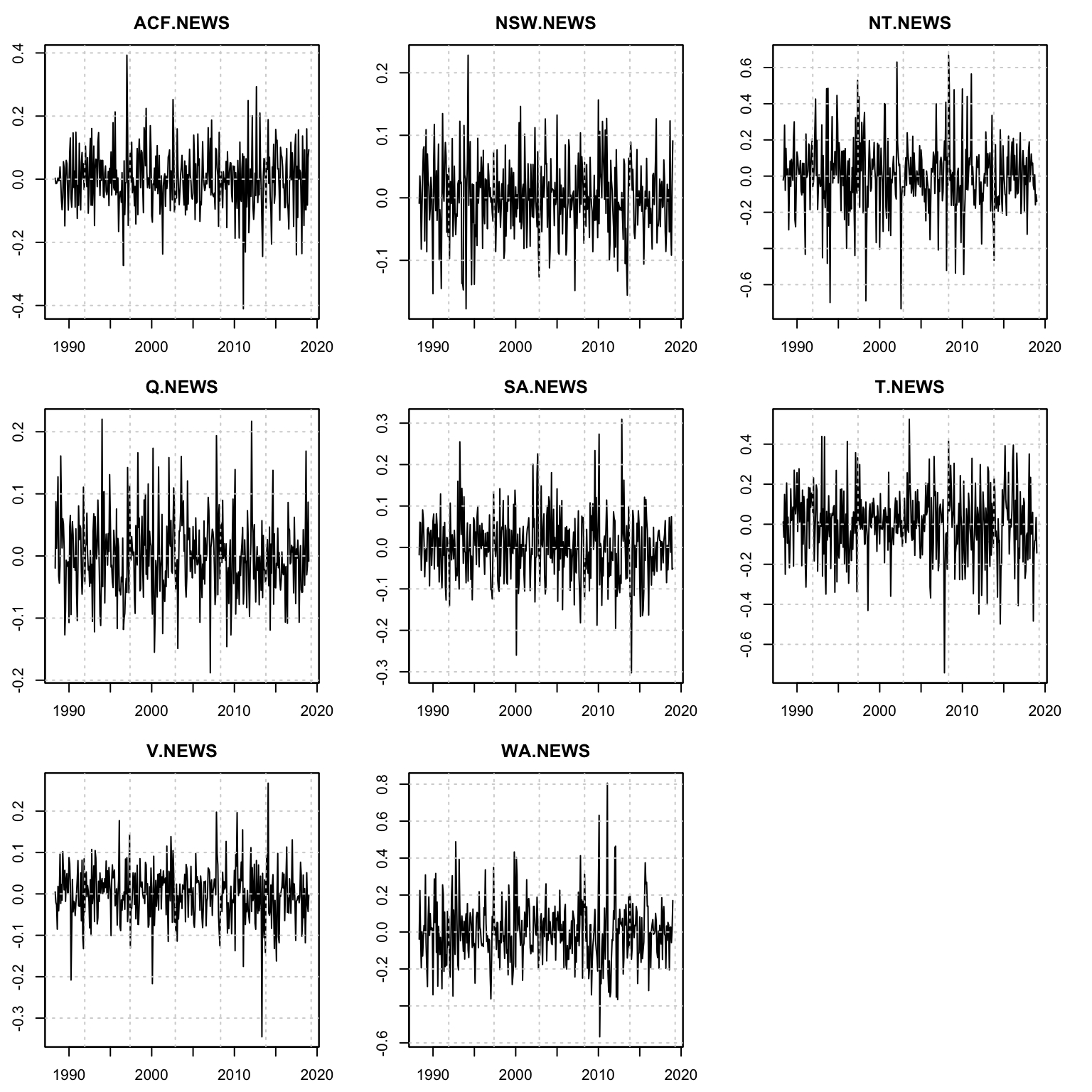

Fitted and residuals:

head(fitted(mod)[,1:4], 4)## ACF.NEWS NSW.NEWS NT.NEWS Q.NEWS

## 1988-04-30 5.083160 73.64485 1.826273 32.42631

## 1988-05-31 5.678305 77.54145 1.992810 33.63348

## 1988-06-30 5.068541 74.72022 2.038204 34.26928

## 1988-07-31 4.932006 80.55169 2.504371 36.89154head(residuals(mod)[,1:4], 4)## ACF.NEWS NSW.NEWS NT.NEWS Q.NEWS

## 1988-04-30 0.01683990 -0.6448491 -0.026272664 -0.6263074

## 1988-05-31 -0.07830457 2.7585476 0.007190382 3.0665182

## 1988-06-30 -0.06854076 0.5797782 0.361795877 0.4307202

## 1988-07-31 -0.03200619 -6.3516878 -0.104371326 2.2084582head(residuals(mod, raw = TRUE)[,1:4], 4)## ACF.NEWS NSW.NEWS NT.NEWS Q.NEWS

## 1988-04-30 0.003307404 -0.008794762 -0.021775194 -0.01950376

## 1988-05-31 -0.013886100 0.034956958 0.005781138 0.08725470

## 1988-06-30 -0.013615046 0.007729372 0.281470559 0.01249037

## 1988-07-31 -0.006510636 -0.082134913 -0.078646140 0.05814016where argument raw denotes the non backtransformed residuals (if a Box Cox calculation was used).

States decomposition:

tsx <- tsdecompose(mod)

head(tsx$Level[,1:4], 4)## Level[ACF.NEWS] Level[NSW.NEWS] Level[NT.NEWS] Level[Q.NEWS]

## 1988-04-30 1.691734 4.364045 0.9000652 3.546509

## 1988-05-31 1.681585 4.389578 0.9021136 3.610841

## 1988-06-30 1.671633 4.395405 1.1037003 3.621061

## 1988-07-31 1.666822 4.336189 1.0446420 3.664320head(tsx$Slope[,1:4], 4)## Slope[ACF.NEWS] Slope[NSW.NEWS] Slope[NT.NEWS] Slope[Q.NEWS]

## 1988-04-30 -9.831044e-05 0.0002321412 -0.002135869 0.001179101

## 1988-05-31 -9.831044e-05 0.0002321412 -0.002135869 0.001179101

## 1988-06-30 -9.831044e-05 0.0002321412 -0.002135869 0.001179101

## 1988-07-31 -9.831044e-05 0.0002321412 -0.002135869 0.001179101head(tsx$Seasonal[,1:4], 4)## Seasonal[ACF.NEWS] Seasonal[NSW.NEWS] Seasonal[NT.NEWS]

## 1988-04-30 0.045016743 -0.013464562 -0.01673148

## 1988-05-31 -0.058434214 -0.076059727 0.01760856

## 1988-06-30 -0.075788732 -0.006737928 0.17613856

## 1988-07-31 -0.002813842 0.020157397 0.15300637

## Seasonal[Q.NEWS]

## 1988-04-30 -0.032166030

## 1988-05-31 -0.077771015

## 1988-06-30 -0.014257399

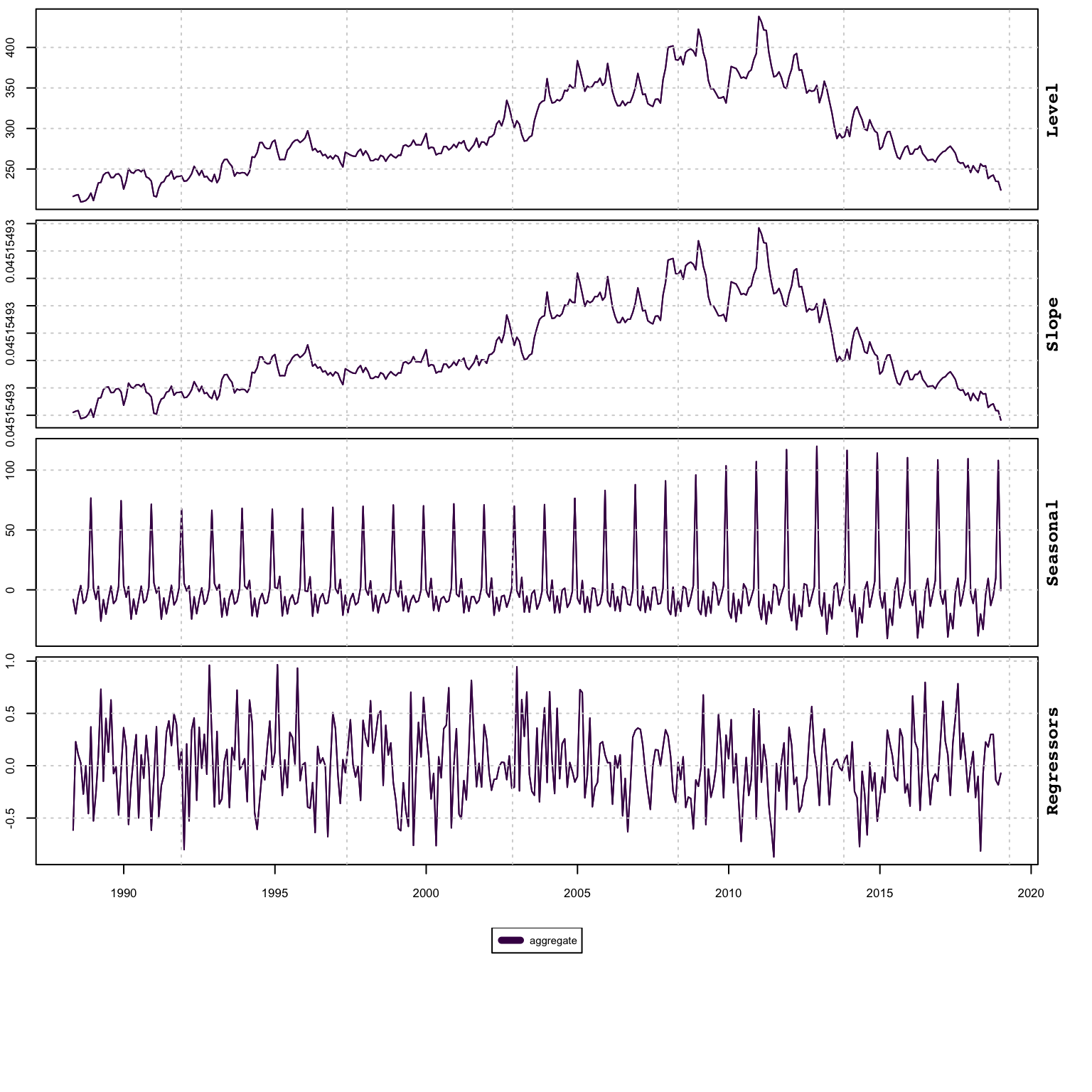

## 1988-07-31 0.009366033Plot methods exist outputting the fitted values, the states and the residuals, with an argument for the series to output, with a maximum number per call of 10 series:

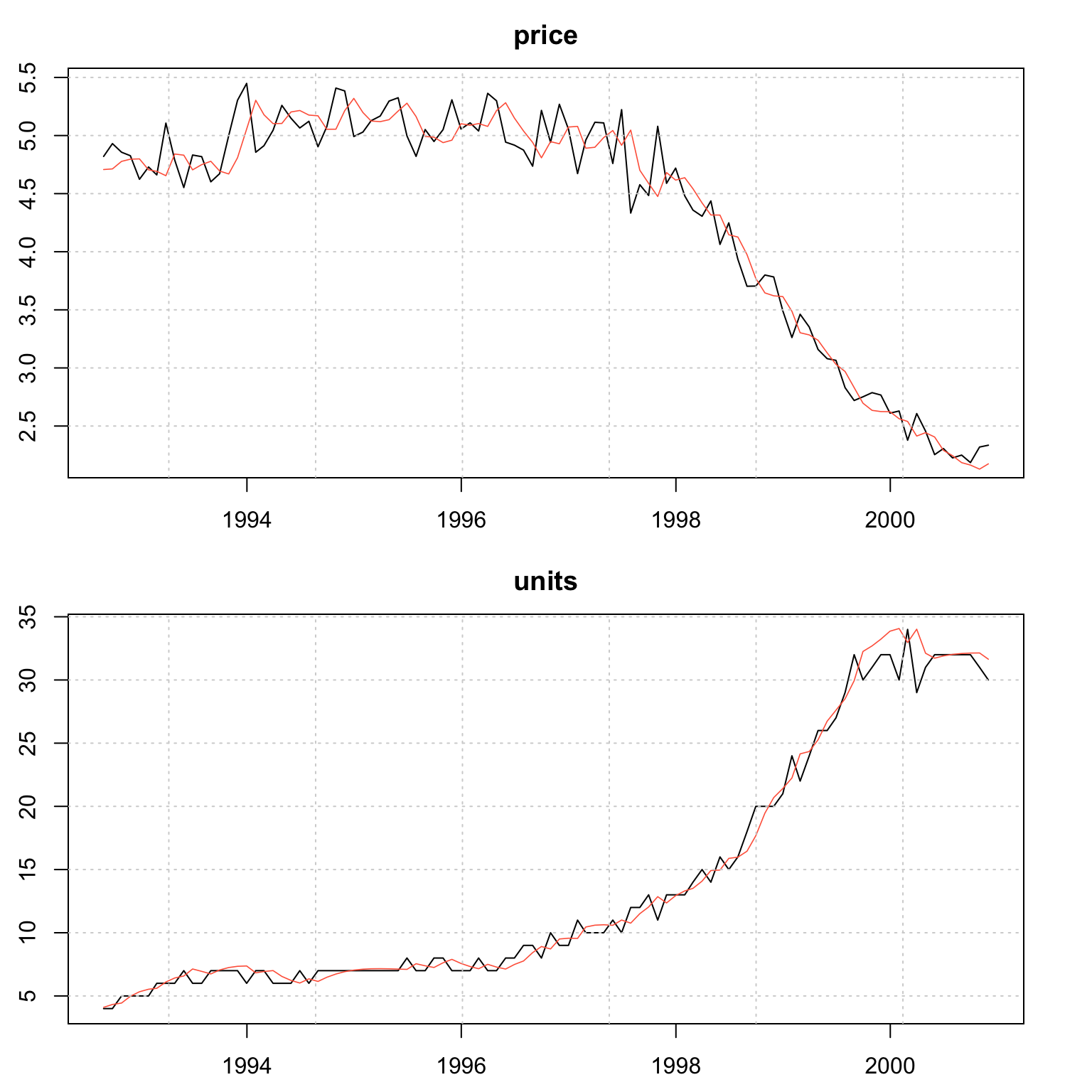

plot(mod)

plot(mod, type = "states")

plot(mod, type = "residuals")

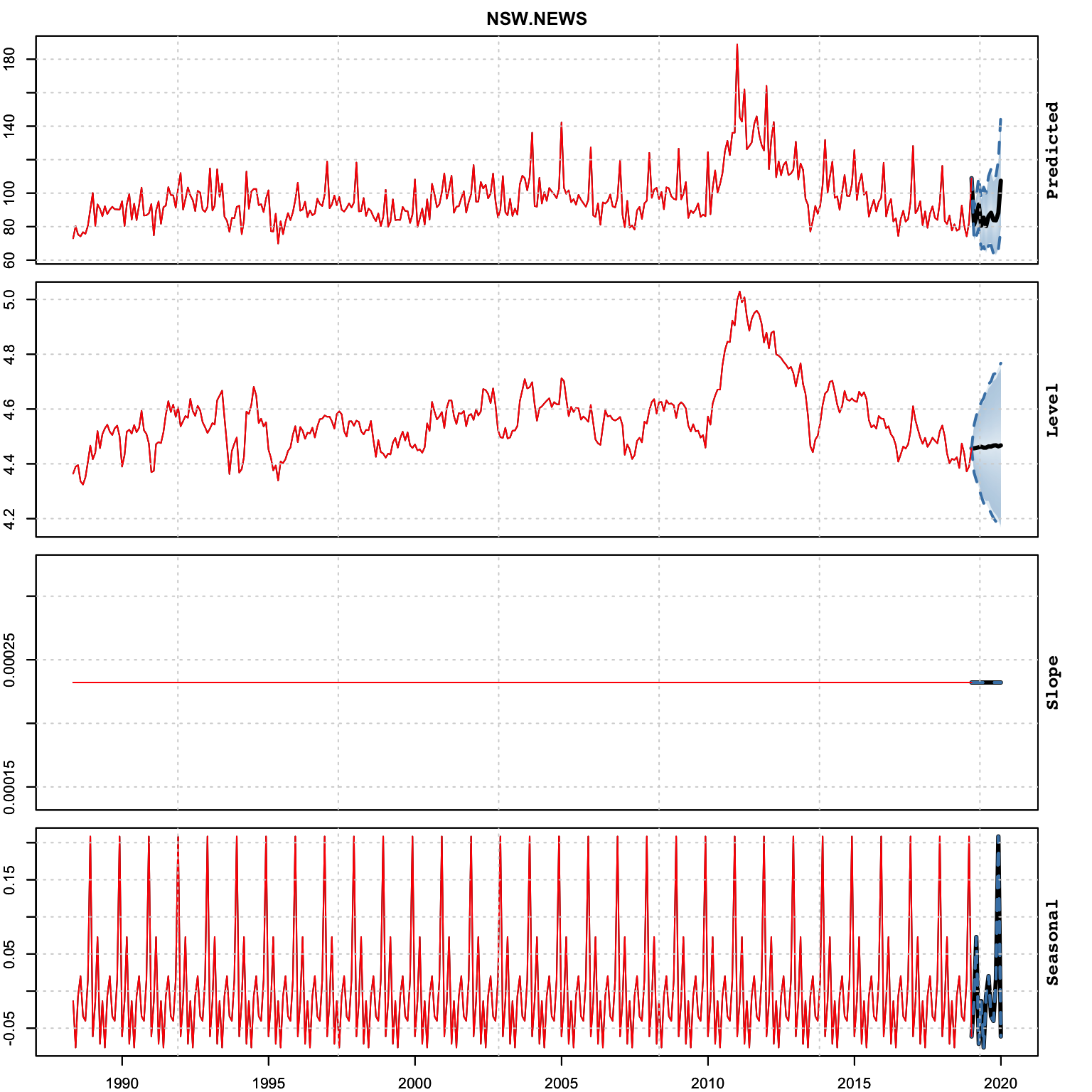

The predict function will output a list which includes data.table of each series’ prediction object and state decomposition:

p <- predict(mod, h = 12)

p$prediction_table## series Level Slope Seasonal

## <char> <list> <list> <list>

## 1: ACF.NEWS <tsmodel.predict[2]> <tsmodel.predict[2]> <tsmodel.predict[2]>

## 2: NSW.NEWS <tsmodel.predict[2]> <tsmodel.predict[2]> <tsmodel.predict[2]>

## 3: NT.NEWS <tsmodel.predict[2]> <tsmodel.predict[2]> <tsmodel.predict[2]>

## 4: Q.NEWS <tsmodel.predict[2]> <tsmodel.predict[2]> <tsmodel.predict[2]>

## 5: SA.NEWS <tsmodel.predict[2]> <tsmodel.predict[2]> <tsmodel.predict[2]>

## 6: T.NEWS <tsmodel.predict[2]> <tsmodel.predict[2]> <tsmodel.predict[2]>

## 7: V.NEWS <tsmodel.predict[2]> <tsmodel.predict[2]> <tsmodel.predict[2]>

## 8: WA.NEWS <tsmodel.predict[2]> <tsmodel.predict[2]> <tsmodel.predict[2]>

## X Error Predicted

## <list> <list> <list>

## 1: <tsmodel.predict[2]> <tsmodel.predict[2]>

## 2: <tsmodel.predict[2]> <tsmodel.predict[2]>

## 3: <tsmodel.predict[2]> <tsmodel.predict[2]>

## 4: <tsmodel.predict[2]> <tsmodel.predict[2]>

## 5: <tsmodel.predict[2]> <tsmodel.predict[2]>

## 6: <tsmodel.predict[2]> <tsmodel.predict[2]>

## 7: <tsmodel.predict[2]> <tsmodel.predict[2]>

## 8: <tsmodel.predict[2]> <tsmodel.predict[2]>The plot method on the predicted object can output 1 series at a time using the series argument:

plot(p, series = 2)

The simulation method also returns a list with a data.table object with each series’ simulation object and state decomposition. Additionally, there are optional arguments for the initial state to use (init_states) and parameter vector (pars).

s <- simulate(mod, h = 12*8, nsim = 100, init_states = mod$spec$vets_env$States[,1])

s$simulation_table## series Level Slope Seasonal

## <char> <list> <list> <list>

## 1: ACF.NEWS <tsmodel.predict[2]> <tsmodel.predict[2]> <tsmodel.predict[2]>

## 2: NSW.NEWS <tsmodel.predict[2]> <tsmodel.predict[2]> <tsmodel.predict[2]>

## 3: NT.NEWS <tsmodel.predict[2]> <tsmodel.predict[2]> <tsmodel.predict[2]>

## 4: Q.NEWS <tsmodel.predict[2]> <tsmodel.predict[2]> <tsmodel.predict[2]>

## 5: SA.NEWS <tsmodel.predict[2]> <tsmodel.predict[2]> <tsmodel.predict[2]>

## 6: T.NEWS <tsmodel.predict[2]> <tsmodel.predict[2]> <tsmodel.predict[2]>

## 7: V.NEWS <tsmodel.predict[2]> <tsmodel.predict[2]> <tsmodel.predict[2]>

## 8: WA.NEWS <tsmodel.predict[2]> <tsmodel.predict[2]> <tsmodel.predict[2]>

## Simulated

## <list>

## 1: <tsmodel.predict[2]>

## 2: <tsmodel.predict[2]>

## 3: <tsmodel.predict[2]>

## 4: <tsmodel.predict[2]>

## 5: <tsmodel.predict[2]>

## 6: <tsmodel.predict[2]>

## 7: <tsmodel.predict[2]>

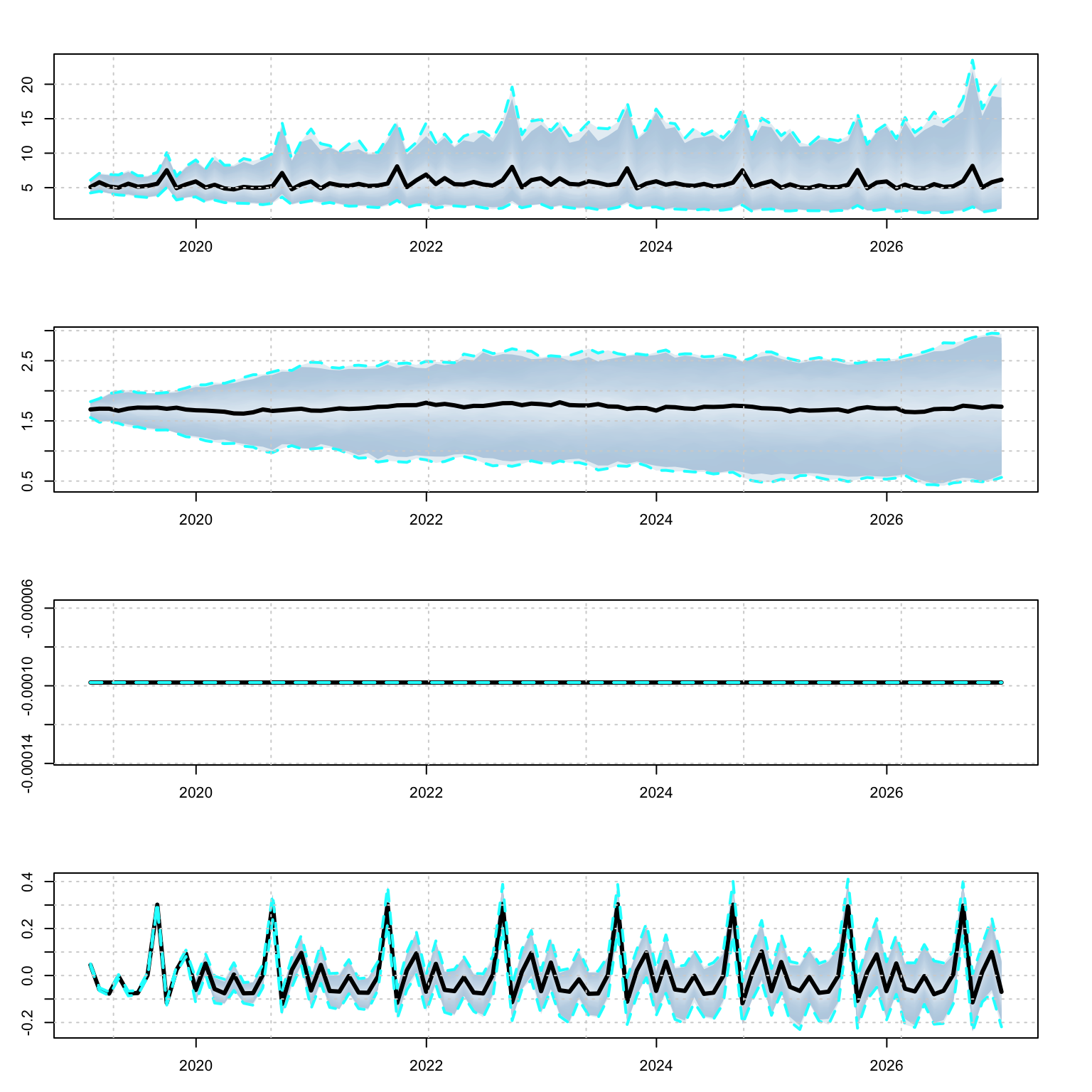

## 8: <tsmodel.predict[2]>par(mfrow = c(4,1),mar = c(3,3,3,3))

plot(s$simulation_table[series == "ACF.NEWS"]$Simulated[[1]], main = "Simulated Series")

plot(s$simulation_table[series == "ACF.NEWS"]$Level[[1]], main = "Simulated Level")

plot(s$simulation_table[series == "ACF.NEWS"]$Slope[[1]], main = "Simulated Slope")

plot(s$simulation_table[series == "ACF.NEWS"]$Seasonal[[1]], main = "Simulated Seasonal")

The tsbacktest method has similar arguments to other backtest implementations in the tsmodels framework:

suppressMessages(library(future))

plan(list(

tweak(sequential),

tweak(multisession, workers = 5)

))

b <- tsbacktest(spec, h = 12, solver = "nlminb", trace = FALSE, autodiff = TRUE)

plan(sequential)The returned object is a list of 2 data.tables with the predictions for each series by estimation date and horizon and the summary metrics table:

head(b$prediction)## series estimation_date horizon size forecast_dates forecast actual

## <char> <Date> <int> <int> <char> <num> <num>

## 1: ACF.NEWS 2003-07-31 1 184 2003-08-31 11.22847 11.2

## 2: ACF.NEWS 2003-07-31 2 184 2003-09-30 10.64990 10.1

## 3: ACF.NEWS 2003-07-31 3 184 2003-10-31 10.57616 9.7

## 4: ACF.NEWS 2003-07-31 4 184 2003-11-30 11.29816 9.7

## 5: ACF.NEWS 2003-07-31 5 184 2003-12-31 15.65695 14.2

## 6: ACF.NEWS 2003-07-31 6 184 2004-01-31 10.19482 8.6head(b$metrics)## series horizon MAPE MSLRE BIAS n

## <char> <int> <num> <num> <num> <int>

## 1: ACF.NEWS 1 0.08299767 0.01147201 0.01607784 185

## 2: ACF.NEWS 2 0.09826851 0.01485794 0.02508737 184

## 3: ACF.NEWS 3 0.10889976 0.01775767 0.03540852 183

## 4: ACF.NEWS 4 0.11984134 0.02163159 0.04479359 182

## 5: ACF.NEWS 5 0.11957098 0.02159764 0.05274425 181

## 6: ACF.NEWS 6 0.12708012 0.02327741 0.06098494 1806.7.3 Example: Australian Retail Sales Grouped Dynamics

In this example, we show how grouping works on 99 series, where grouping is by sector.

suppressWarnings(suppressPackageStartupMessages(library(tsvets)))

suppressMessages(library(tsmethods))

suppressMessages(library(tsaux))

suppressMessages(library(xts))

suppressMessages(library(data.table))

suppressMessages(library(future))

plan(list(

tweak(sequential),

tweak(multisession, workers = 5)

))

data("austretail", package = "tsdatasets")

y <- austretail["2000/"]

include <- sapply(1:ncol(y), function(i) all(!is.na(y[,i])))

y <- y[,include]

groups <- sapply(1:ncol(y), function(i) strsplit(colnames(y[,i]), "\\.")[[1]][2])

groups_index <- sort.int(groups, index.return = TRUE)

y <- y[,groups_index$ix]

groups <- groups[groups_index$ix]

groups <- rle(groups)$lengths

groups <- unlist(sapply(1:length(groups), function(i) rep(i, groups[i])))

spec <- vets_modelspec(y[,1:99], level = "grouped", slope = "grouped", damped = "none",

group = groups[1:99], seasonal = "grouped", lambda = 0.5,

dependence = "diagonal", frequency = 12)

mod <- estimate(spec, solver = "nlminb")

plan(sequential)The joint estimation of the 99 series takes about 36.7841386 minutes.

We avoid the summary method for such a large object, and instead print out the coefficients and the performance metrics:

cf <- coef(mod)

print(data.table::data.table(Coefficient = names(cf), Value = round(cf,4)))## Coefficient Value

## <char> <num>

## 1: Level[Group = 1] 0.4452

## 2: Level[Group = 2] 0.5126

## 3: Level[Group = 3] 0.7164

## 4: Level[Group = 4] 0.6882

## 5: Level[Group = 5] 0.1651

## 6: Level[Group = 6] 0.5261

## 7: Level[Group = 7] 0.5494

## 8: Level[Group = 8] 0.4238

## 9: Level[Group = 9] 0.5011

## 10: Level[Group = 10] 0.5850

## 11: Level[Group = 11] 0.7356

## 12: Level[Group = 12] 0.4934

## 13: Level[Group = 13] 0.7173

## 14: Slope[Group = 1] 0.0000

## 15: Slope[Group = 2] 0.0000

## 16: Slope[Group = 3] 0.0000

## 17: Slope[Group = 4] 0.0000

## 18: Slope[Group = 5] 0.0067

## 19: Slope[Group = 6] 0.0000

## 20: Slope[Group = 7] 0.0000

## 21: Slope[Group = 8] 0.0000

## 22: Slope[Group = 9] 0.0000

## 23: Slope[Group = 10] 0.0061

## 24: Slope[Group = 11] 0.0000

## 25: Slope[Group = 12] 0.0000

## 26: Slope[Group = 13] 0.0000

## 27: Seasonal[Group = 1] 0.3373

## 28: Seasonal[Group = 2] 0.2047

## 29: Seasonal[Group = 3] 0.0000

## 30: Seasonal[Group = 4] 0.0000

## 31: Seasonal[Group = 5] 0.1315

## 32: Seasonal[Group = 6] 0.2795

## 33: Seasonal[Group = 7] 0.0000

## 34: Seasonal[Group = 8] 0.3143

## 35: Seasonal[Group = 9] 0.3885

## 36: Seasonal[Group = 10] 0.0000

## 37: Seasonal[Group = 11] 0.0000

## 38: Seasonal[Group = 12] 0.2692

## 39: Seasonal[Group = 13] 0.0013

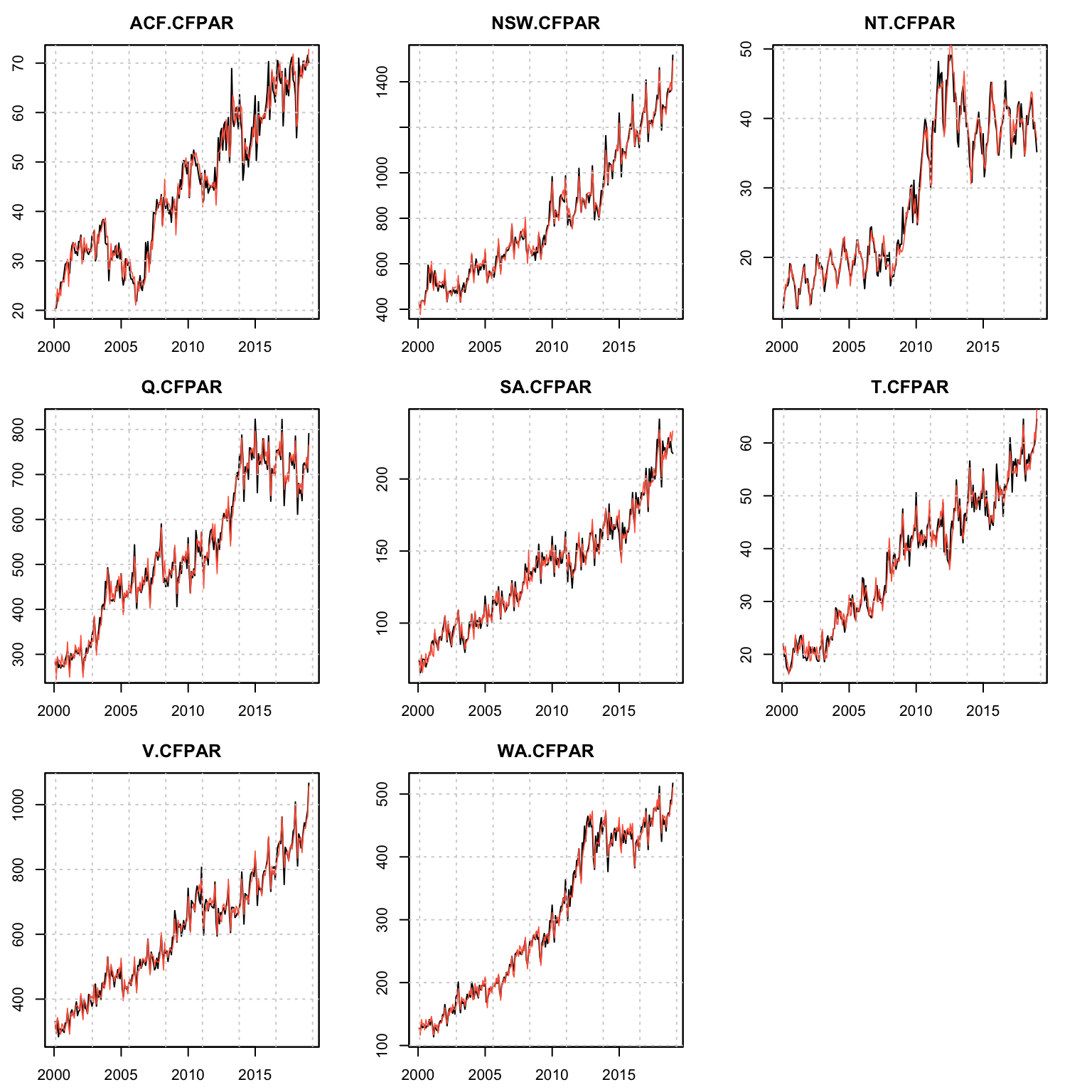

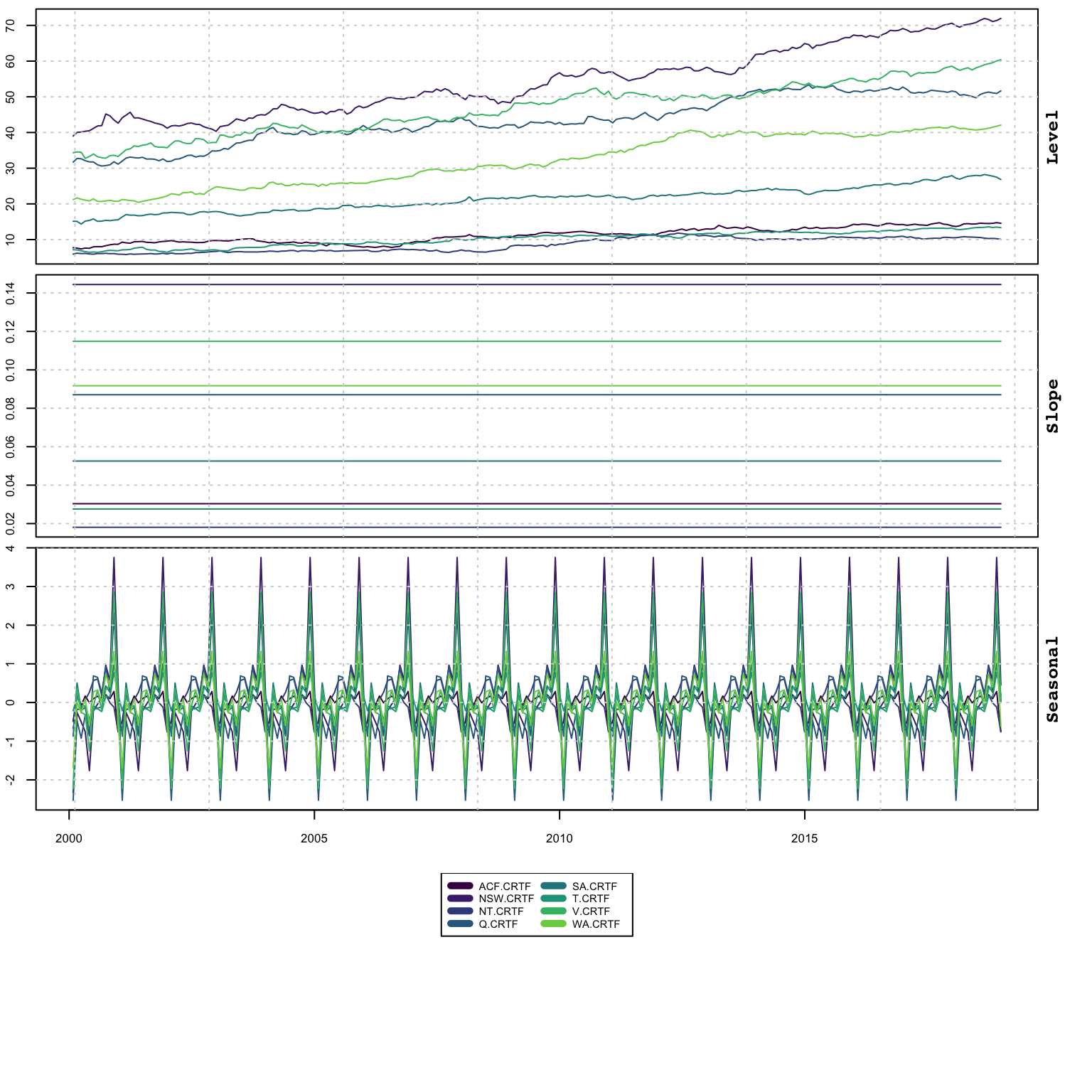

## Coefficient Valuetsmetrics(mod)## N no_pars LogLik AIC BIC AICc MAPE MSLRE

## 1 22572 1425 -7613.512 -4763.512 6671.351 -4571.32 0.04063859 0.003203836

## WAPE WSLRE

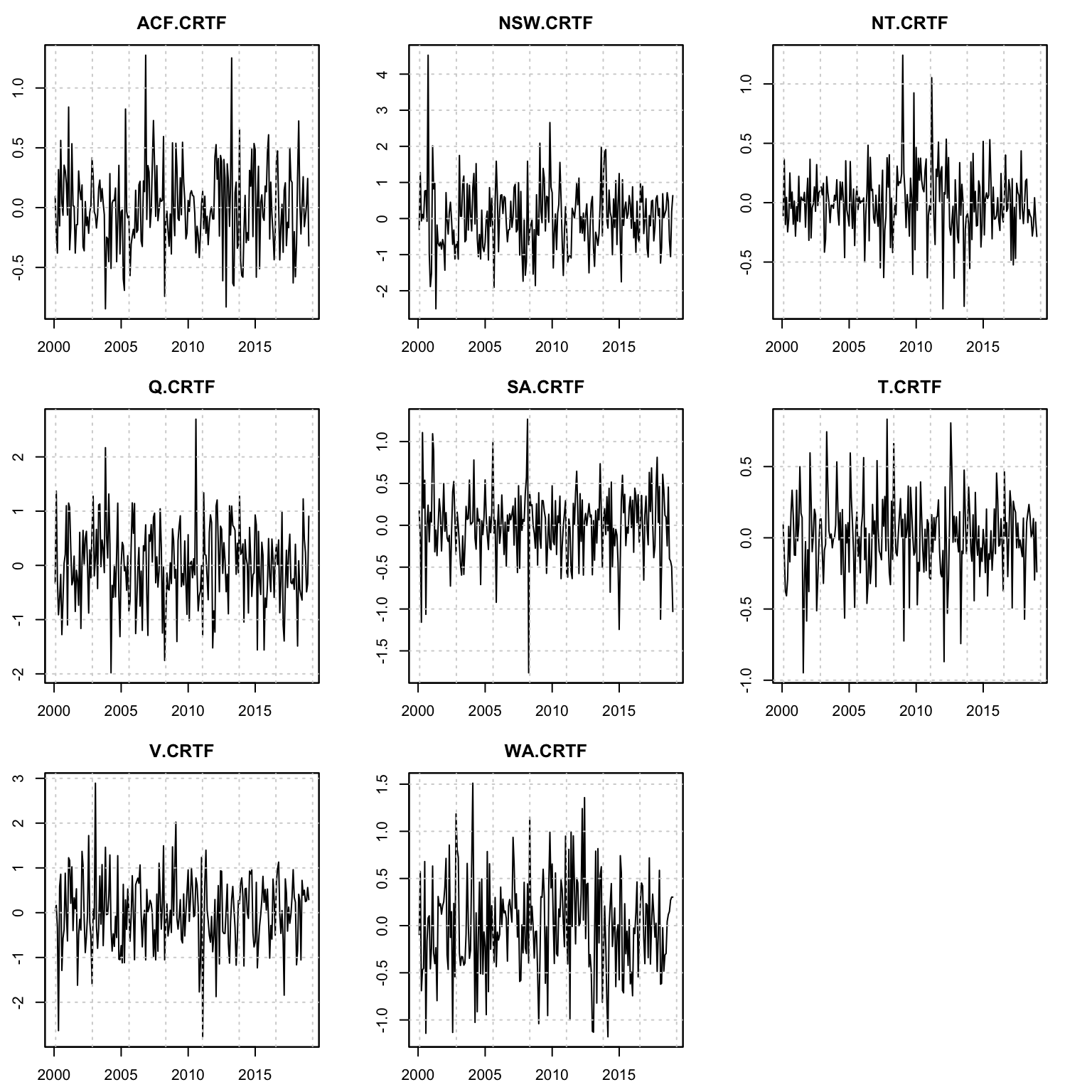

## 1 0.04063859 0.003203836plot(mod, series = (1:99)[groups == 4])

plot(mod, series = (1:99)[groups == 4], type = "states")

plot(mod, series = (1:99)[groups == 4], type = "residuals")

6.7.4 Example: Australian Retail Sales Filtering

This example highlights the use of the tsfilter method for online filtering

as already discussed in Sections 4.6.4 and 5.5.5.

suppressWarnings(suppressPackageStartupMessages(library(tsvets)))

suppressMessages(library(tsmethods))

suppressMessages(library(tsaux))

suppressMessages(library(xts))

data("austretail", package = "tsdatasets")

y <- na.omit(austretail[,grepl("NEWS", colnames(austretail))])

y_train <- y[1:(369 - 24)]

y_test <- y[(369 - 24 + 1):369]

spec <- vets_modelspec(y_train, level = "common", slope = "diagonal", damped = "none",

seasonal = "common", lambda = 0.5, dependence = "diagonal",

frequency = 12)

mod <- estimate(spec, solver = "nlminb")

filt <- tsfilter(mod, y = y_test)

tail(fitted(mod))## ACF.NEWS NSW.NEWS NT.NEWS Q.NEWS SA.NEWS T.NEWS V.NEWS

## 2016-07-31 6.274802 85.48929 1.563078 51.79401 12.263423 12.41307 47.63892

## 2016-08-31 5.822149 87.91927 1.518185 54.59904 10.952639 12.97214 47.66849

## 2016-09-30 4.902062 81.27385 1.394669 51.86892 9.940538 13.71905 45.84748

## 2016-10-31 4.791341 83.27201 1.319689 55.07794 9.960859 12.42058 49.48286

## 2016-11-30 5.547111 86.93625 1.357322 54.95787 10.368365 12.07245 50.89754

## 2016-12-31 8.184714 116.27851 1.858932 72.01650 15.622342 14.69097 76.20901

## WA.NEWS

## 2016-07-31 40.75596

## 2016-08-31 42.52751

## 2016-09-30 39.24565

## 2016-10-31 40.45786

## 2016-11-30 41.79131

## 2016-12-31 55.58459head(tail(fitted(filt), 24 + 1), 5)## ACF.NEWS NSW.NEWS NT.NEWS Q.NEWS SA.NEWS T.NEWS V.NEWS

## 2016-12-31 8.184714 116.27851 1.858932 72.01650 15.622342 14.69097 76.20901

## 2017-01-31 5.136207 92.07696 1.239745 57.96096 8.790413 12.48530 47.68477

## 2017-02-28 7.150196 94.62244 1.098936 52.06101 9.564020 13.50578 47.71324

## 2017-03-31 5.671755 98.44475 1.105181 54.21634 11.124740 11.63927 52.55807

## 2017-04-30 4.718627 82.17766 1.158383 51.48423 8.319089 10.94583 47.45609

## WA.NEWS

## 2016-12-31 55.58459

## 2017-01-31 48.38624

## 2017-02-28 40.52982

## 2017-03-31 40.46546

## 2017-04-30 37.865866.7.5 Example: Australian Retail Sales Aggregation

This demonstrates the ability to aggregate a model estimated with homogeneous coefficients. We continue with the retail sales data, which being a set of flow variables, can be aggregated.

suppressWarnings(suppressPackageStartupMessages(library(tsvets)))

suppressMessages(library(tsmethods))

suppressMessages(library(tsaux))

suppressMessages(library(xts))

data("austretail", package = "tsdatasets")

y <- na.omit(austretail[,grepl("NEWS", colnames(austretail))])We use a vector of ones as weights, to denote summation to the total for the News category.

weights <- rep(1, 8)Additionally, we also include, purely for expositional purposes, a matrix of 5 randomly generated regressors:

xreg <- xts(matrix(rnorm(nrow(y) * 5), nrow = nrow(y), ncol = 5), index(y))

xreg_include <- matrix(2, ncol = 5, nrow = ncol(y))

xreg_include[2,1] <- 1Setting the include matrix to the value of 2 means pooling, and we also set regressor 1 for series 2 to a value of 1 in order to denote that we want this to be estimated seperately (non-pooled).

spec <- vets_modelspec(y, level = "common", slope = "common", seasonal = "common",

dependence = "diagonal", frequency = 12, xreg = xreg,

xreg_include = xreg_include)

mod <- estimate(spec, solver = "nlminb", control = list(trace = 0))We can extract specific matrices from the estimate object using the as yet unexported function p_matrix:

tsvets:::p_matrix(mod)$X## 8 x 5 Matrix of class "dgeMatrix"

## x1 x2 x3 x4 x5

## ACF.NEWS 0.01394335 -0.01667844 -0.0268727 -0.0004924587 -0.01555726

## NSW.NEWS -0.35119906 -0.01667844 -0.0268727 -0.0004924587 -0.01555726

## NT.NEWS 0.01394335 -0.01667844 -0.0268727 -0.0004924587 -0.01555726

## Q.NEWS 0.01394335 -0.01667844 -0.0268727 -0.0004924587 -0.01555726

## SA.NEWS 0.01394335 -0.01667844 -0.0268727 -0.0004924587 -0.01555726

## T.NEWS 0.01394335 -0.01667844 -0.0268727 -0.0004924587 -0.01555726

## V.NEWS 0.01394335 -0.01667844 -0.0268727 -0.0004924587 -0.01555726

## WA.NEWS 0.01394335 -0.01667844 -0.0268727 -0.0004924587 -0.01555726where we can observe that the coefficients for each regressor have been pooled, with the exception of x1 for the NSW.NEWS series.

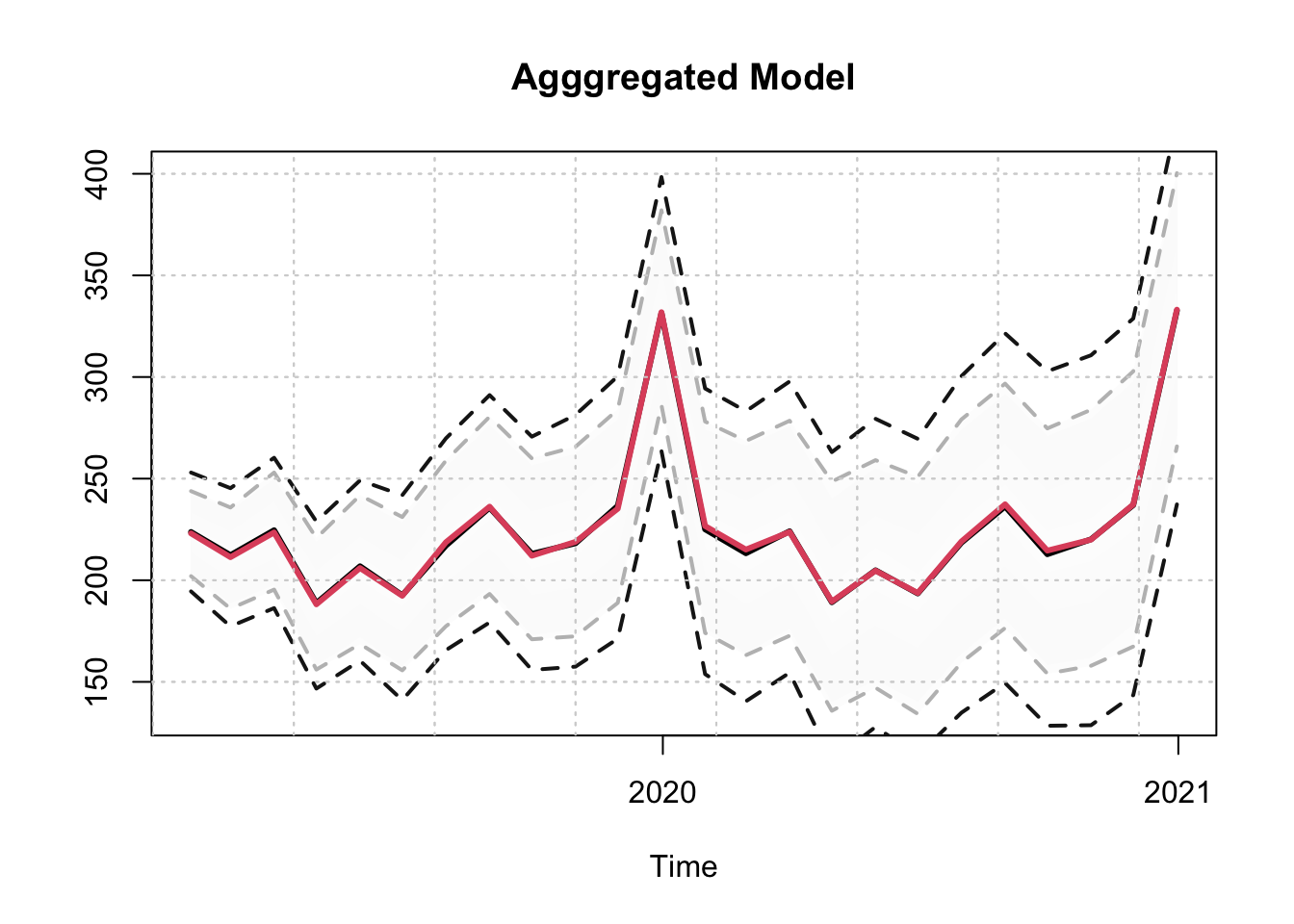

We can now proceed to aggregate the model using the tsaggregate method on the estimated object:

mod_aggregate <- tsaggregate(mod, weights = weights, return_model = TRUE)

summary(mod_aggregate)##

## Vector ETS

## -----------------------------------

## Level : common

## Slope : common

## Seasonal : common

## Dependence : diagonal

## No. Series : 1

## No. TimePoints : 369

##

## Parameter Matrices

##

## Level Matrix

## 1 x 1 diagonal matrix of class "ddiMatrix"

## [,1]

## [1,] 0.6224697

##

## Slope Matrix

## 1 x 1 diagonal matrix of class "ddiMatrix"

## [,1]

## [1,] 1e-12

##

## Seasonal Matrix

## 1 x 1 diagonal matrix of class "ddiMatrix"

## [,1]

## [1,] 0.1257502

##

## Regressor Matrix

## 1 x 5 Matrix of class "dgeMatrix"

## x1 x2 x3 x4 x5

## aggregate -0.2535956 -0.1334275 -0.2149816 -0.003939669 -0.1244581

##

## Covariance Matrix

## 1 x 1 diagonal matrix of class "ddiMatrix"

## aggregate

## aggregate 222.3031

##

## Accuracy Criteria

## Mean Weighted

## MAPE 0.0352 0.0352

## MSLRE 0.0021 0.0021plot(mod_aggregate)

suppressWarnings(plot(mod_aggregate, type = "states"))

We can then proceed with prediction as well as simulation, both of which allow for the outputs to be aggregated. Alternatively, since we now have an aggregated model object, we can also predict or simulate directly from that object.

newxreg <- xts(matrix(rnorm(24 * 5), nrow = 24, ncol = 5),

future_dates(tail(index(y),1),"months",24))

p <- predict(mod, newxreg = newxreg)

p_agg_series <- tsaggregate(p, weights = weights)

p_agg_model <- predict(mod_aggregate, newxreg = newxreg)

plot(p_agg_series$distribution, gradient_color = "whitesmoke", interval_color = "grey",

main = "Agggregated Model")

plot(p_agg_model$prediction_table[1]$Predicted[[1]]$distribution,

gradient_color = "whitesmoke", add = TRUE, median_color = 2,

interval_color = "grey10")

As we can observe, the output of the 2 predictions is identical (subject to some randomness from the simulation of the predictive distribution). We do the same for the simulation method:

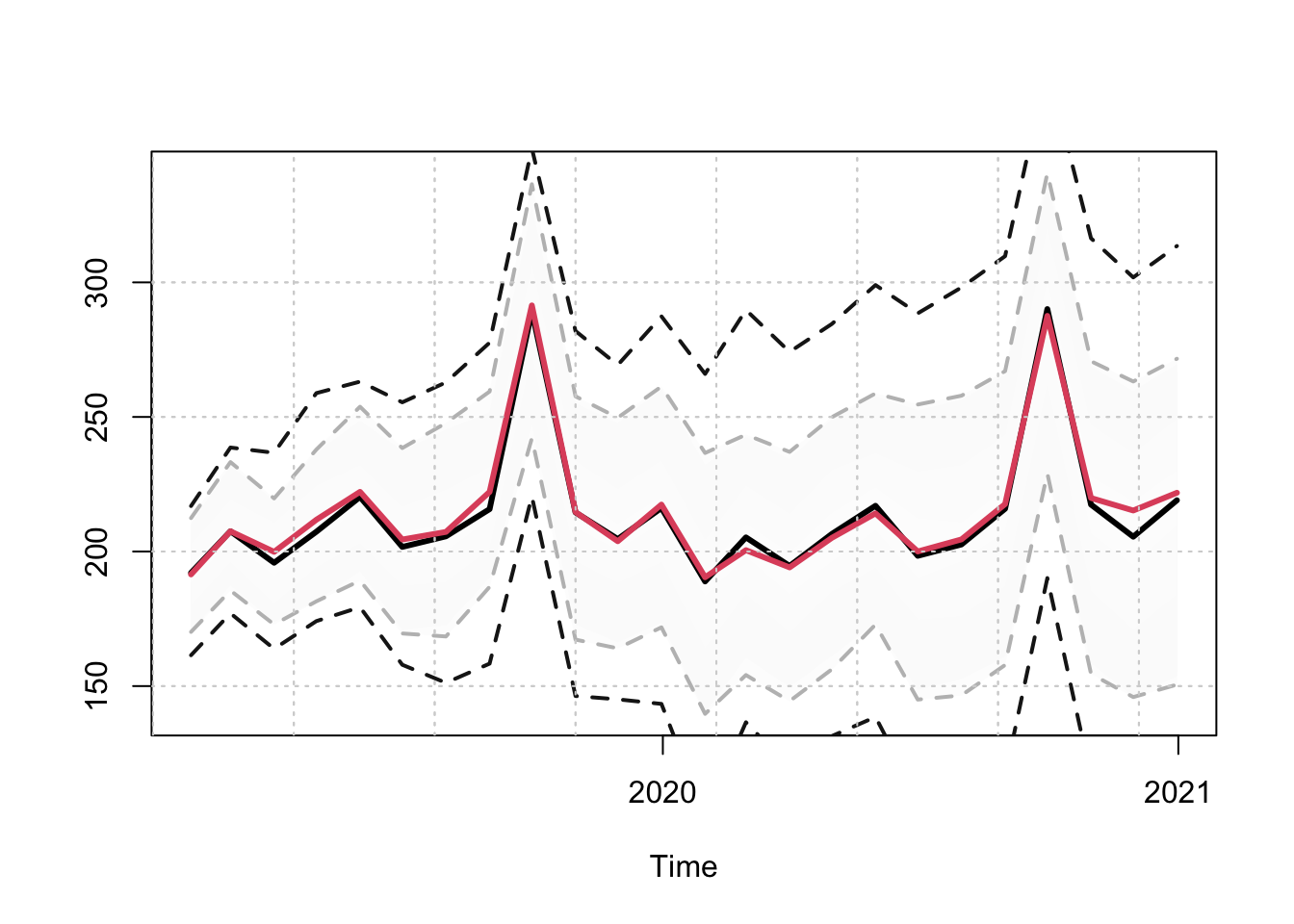

s <- simulate(mod, nsim = 100, h = 24, newxreg = newxreg, seed = 700)

s_agg_series <- tsaggregate(s, weights = weights)

s_agg_model <- simulate(mod_aggregate, nsim = 100, h = 24, newxreg = newxreg,

seed = 700)

plot(s_agg_series$distribution, gradient_color = "whitesmoke",

interval_color = "grey")

plot(s_agg_model$simulation_table[1]$Simulated[[1]]$distribution,

gradient_color = "whitesmoke", add = TRUE, median_color = 2,

interval_color = "grey10")

6.7.6 Example: Groupwise Pooling

It is also possible to have group wise pooling. We saw above that we could specify

pooling using the number of series times number of regressors matrix,

xreg_include, with values 0, 1 or 2+ (0 = no beta, 1 = individual beta and

2+ = grouped beta). Group pooling can be achieved by setting values of 2+.

For instance 2 series sharing one pooled estimate, and 3 other

series sharing another grouped estimate would have values of

(2,2,3,3,3).

In the following example we have 8 series and 2 regressors, with different combinations of pooling.

xreg <- xts(matrix(rnorm(nrow(y)*2), ncol = 2, nrow = nrow(y)), index(y))

colnames(xreg) <- c("B1","B2")

xreg_include <- matrix(1, ncol = 2, nrow = ncol(y))

rownames(xreg_include) <- colnames(y)

colnames(xreg_include) <- colnames(xreg)

# individual [1,1], grouped 2:3, 5:6 and 7:8

xreg_include[c(2:3),1] <- 2

xreg_include[c(5:6),1] <- 3

xreg_include[c(7:8),1] <- 4

# individual 1, zero for 2:3, group for 4:8

xreg_include[c(2:3),2] <- 0

xreg_include[c(4:8),2] <- 3

xreg_include## B1 B2

## ACF.NEWS 1 1

## NSW.NEWS 2 0

## NT.NEWS 2 0

## Q.NEWS 1 3

## SA.NEWS 3 3

## T.NEWS 3 3

## V.NEWS 4 3

## WA.NEWS 4 3spec <- vets_modelspec(y, level = "grouped", slope = "grouped",

group = c(1,1,2,2,3,3,4,4), damped = "none",

seasonal = "grouped", xreg = xreg,

xreg_include = xreg_include, lambda = 0,

dependence = "diagonal", frequency = 12)

mod <- estimate(spec, solver = "nlminb")Note that the Box Cox parameter lambda should be the same for the grouped

series else the coefficients should not be pooled (as they have different scales).

6.7.7 Example: Price Volume Aggregation

This final example shows how to jointly model a series based on price-volume relationship with a target of generating a model for Sales: \(Sales = Price\times Volume\)

While this is a multiplicative model, if we were to model the series using a log transformation we would be able to aggregate the model in logs before exponentiating to recover the Sales as the example will demonstrate.

data("priceunits", package = "tsdatasets")

spec <- vets_modelspec(priceunits, level = "common", slope = "common", frequency = 12,

lambda = rep(0,2), dependence = "full")

mod <- estimate(spec, solver = "optim", control = list(trace = 0))

summary(mod)##

## Vector ETS

## -----------------------------------

## Level : common

## Slope : common

## Seasonal : none

## Dependence : full

## No. Series : 2

## No. TimePoints : 100

##

## Parameter Matrices

##

## Level Matrix

## 2 x 2 diagonal matrix of class "ddiMatrix"

## [,1] [,2]

## [1,] 0.3900419 .

## [2,] . 0.3900419

##

## Slope Matrix

## 2 x 2 diagonal matrix of class "ddiMatrix"

## [,1] [,2]

## [1,] 0.09196964 .

## [2,] . 0.09196964

##

## Correlation Matrix

## 2 x 2 Matrix of class "dsyMatrix"

## price units

## price 1.0000000 -0.5939792

## units -0.5939792 1.0000000

##

## Information Criteria

## AIC BIC AICc

## 162.58 182.37 163.02

##

## Accuracy Criteria

## Mean Weighted

## MAPE 0.0508 0.0508

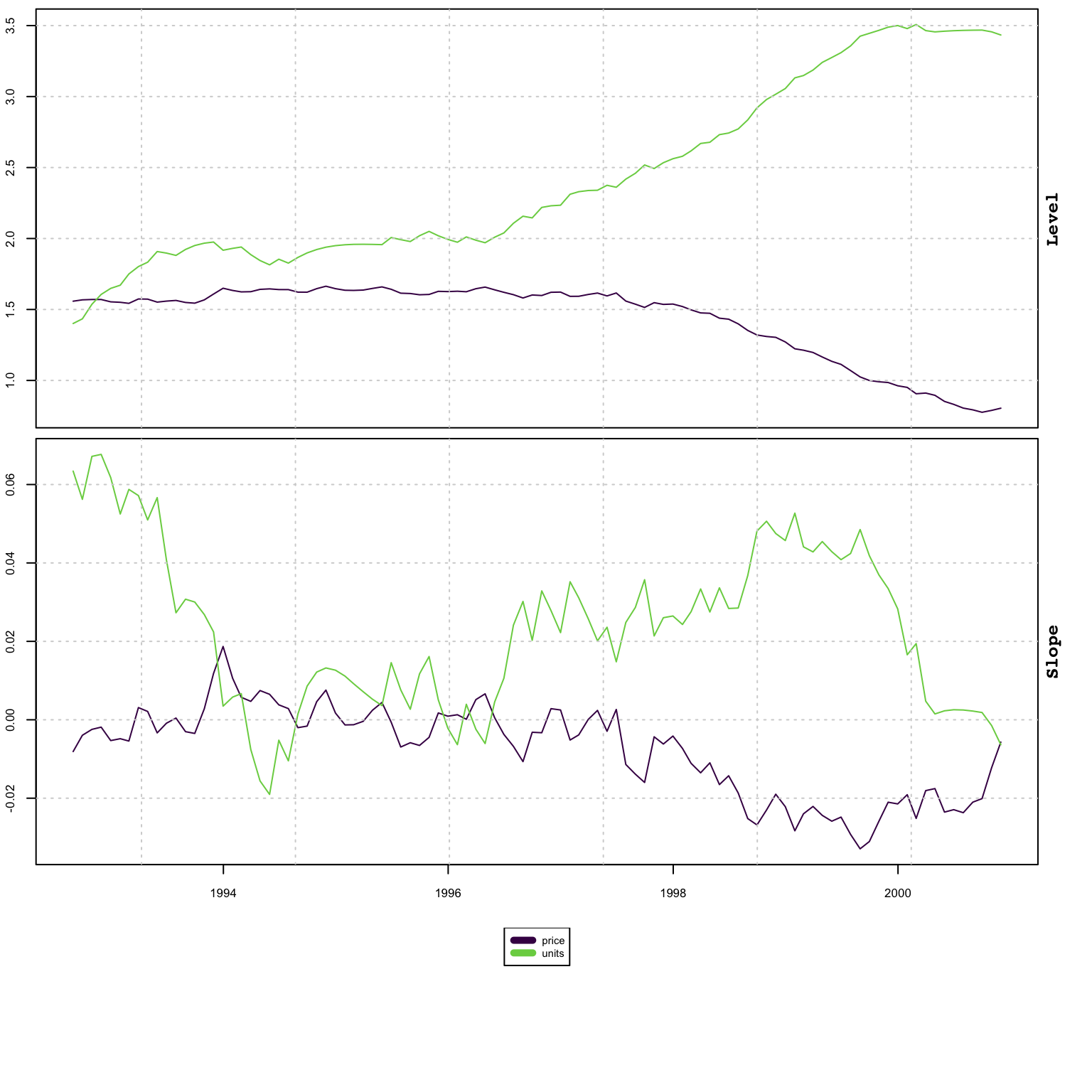

## MSLRE 0.0042 0.0042plot(mod)

plot(mod, type = "states")

weights <- c(1,1)

mod_aggregate <- tsaggregate(mod, weights = weights, return_model = TRUE)

summary(mod_aggregate)##

## Vector ETS

## -----------------------------------

## Level : common

## Slope : common

## Seasonal : none

## Dependence : diagonal

## No. Series : 1

## No. TimePoints : 100

##

## Parameter Matrices

##

## Level Matrix

## 1 x 1 diagonal matrix of class "ddiMatrix"

## [,1]

## [1,] 0.3900419

##

## Slope Matrix

## 1 x 1 diagonal matrix of class "ddiMatrix"

## [,1]

## [1,] 0.09196964

##

## Covariance Matrix

## 1 x 1 diagonal matrix of class "ddiMatrix"

## aggregate

## aggregate 0.003901452

##

## Accuracy Criteria

## Mean Weighted

## MAPE 0.0496 0.0496

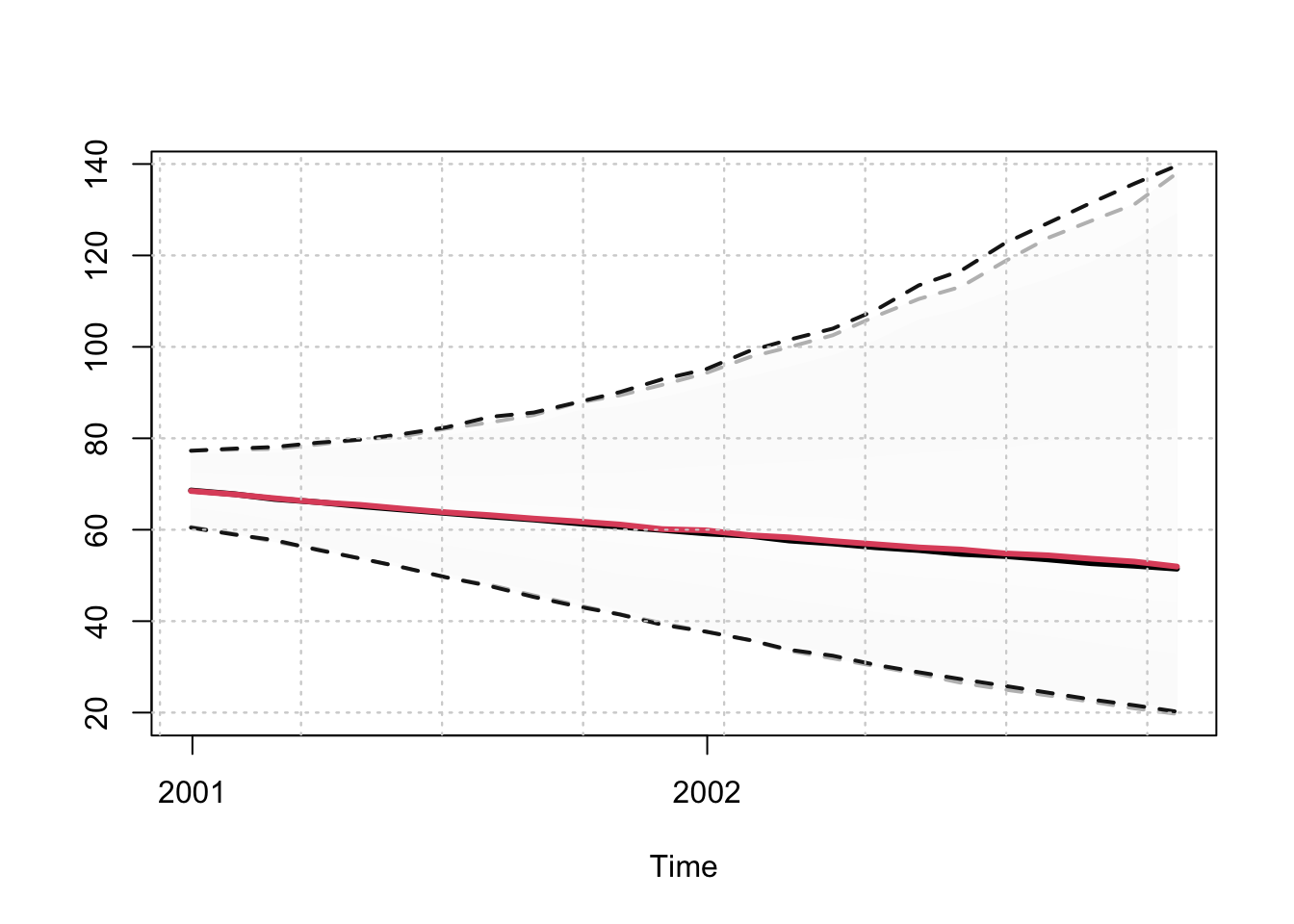

## MSLRE 0.0039 0.0039p <- predict(mod, h = 24, nsim = 5000)

p_agg_model <- predict(mod_aggregate, h = 24, nsim = 5000)

p_agg_series <- tsaggregate(p, weights = weights)

plot(p_agg_model$prediction_table[1]$Predicted[[1]]$distribution,

gradient_color = "whitesmoke", interval_color = "grey")

plot(p_agg_series$distribution, gradient_color = "whitesmoke", add = TRUE,

median_color = 2, interval_color = "grey10")

In the presence of a non-homogeneous model, or a homogeneous model with Box Cox parameter not equal to either 0 (log) or 1 (no transform), then the tsaggregate method will still work but the return_model arguments needs to be set to FALSE and the resulting output will just be the aggregated series.