Chapter 5 tsissm package

5.1 Introduction

The tsissm package implements the linear (and homoscedastic) innovations state space model described in De Livera, Hyndman, and Snyder (2011) and originally proposed by Anderson and Moore (2012). It is in some ways similar to the ETS based models described in R. Hyndman et al. (2008), but with the flexibility of incorporating multiple seasonality, ARMA terms and joint maximization of the Box Cox variance stabilizing lambda parameter.

The following is taken from De Livera, Hyndman, and Snyder (2011). Consider the following SEM model:

\[\begin{equation} \begin{array}{l} y_t^\lambda = {\bf{w'}}{{\bf{x}}_{t - 1}} + \bf{c'}\bf{u}_{t - 1} + {\varepsilon _t}, \quad \varepsilon_t\sim N\left(0,\sigma^2\right),\\ {{\bf{x}}_t} = {\bf{F}}{{\bf{x}}_{t - 1}} + {\bf{g}}{\varepsilon _t}, \end{array} \tag{5.1} \end{equation}\]

where \(\lambda\) represents the Box Cox parameter, \(\bf{w}\) the observation coefficient vector, \(\bf{x}_t\) the unobserved state vector, \(\bf{c}\) a vector of coefficients on the external regressor set \(\bf{u}\).

Define the state vector10 as:

\[\begin{equation} \bf{x}_t = {\left( {{l_t},{b_t},s_t^{(1)},\dots,s_t^{(T)},{d_t},{d_{t - 1}},\dots,{d_{t - p - 1}},{\varepsilon_t},{\varepsilon_{t - 1}},\dots,{\varepsilon _{t - q - 1}}} \right)^\prime }, \tag{5.2} \end{equation}\]

where \(\bf{s}_t^{(i)}\) is the row vector \(\left( {s_{1,t}^{(i)},s_{2,t}^{(i)},\dots,s_{{k_i},t}^{(i)},s_{1,t}^{*(i)},s_{2,t}^{*(i)},\dots,s_{{k_i},t}^{*(i)}} \right)\) in the case of trigonometric seasonality, and \(\left( {s_t^{(i)},s_{t - 1}^{(i)},\dots,s_{t - ({m_i} - 1)}^{(i)}} \right)\) in the case of regular seasonality. Also define \(\bf{1}_r\) and \(\bf{0}_r\) as a vector of ones and zeros, respectively, of length \(r\), \(\bf{O}_{u,v}\) a \(u\times v\) matrix of zeros and \(\bf{I}_{u,v}\) a \(u\times v\) diagonal matrix of ones; let \(\mathbf{\gamma} = \left(\mathbf{\gamma}^{(1)},\dots,\mathbf{\gamma}^{(T)} \right)\) be a vector of seasonal parameters with \({\gamma ^{(i)}} = \left( {\gamma _1^{(i)}{{\bf{1}}_{{k_i}}},\gamma _2^{(i)}{{\bf{1}}_{{k_i}}}} \right)\) in the trigonometric seasonality case (with \(k\) harmonics), and \({\gamma ^{(i)}} = \left( {{\gamma _i},{{\bf{0}}_{{m_i} - 1}}} \right)\) in the regular seasonality case; define \(\theta = \left( {{\theta_1},{\theta_2},\dots,{\theta _p}} \right)\) and \(\psi = \left( {{\psi _1},{\psi _2},\dots,{\psi_q}} \right)\) as the vector of AR(p) and MA(q) parameters, respectively. Define the observation transition vector \({\bf{w}} = {\left( {1,\phi ,{\bf{a}},\theta ,\psi } \right)^\prime }\), where \({\bf{a}} = \left( {{{\bf{a}}^{(1)}},\dots,{{\bf{a}}^{(T)}}} \right)\) with \({{\bf{a}}^{(i)}} = \left( {{{\bf{1}}_{{k_i}}},{{\bf{0}}_{{k_i}}}} \right)\) for the trigonometric case and \({{\bf{a}}^{(i)}} = \left( {{{\bf{0}}_{{m_i} - 1}},1} \right)\) for the regular seasonality case. Define the state error adjustment vector \({\bf{g}} = {\left( {\alpha ,\beta ,\gamma ,1,{{\bf{0}}_{p - 1}},1,{{\bf{0}}_{q - 1}}} \right)^\prime }\). Further, let \({\bf{B}} = \gamma '\theta\), \({\bf{C}} = \gamma '\psi\) and \({\bf{A}} = \oplus _{i = 1}^T{{\bf{A}}_i}\), with

\[\begin{equation} {{\bf{A}}_i} = \left[ {\begin{array}{*{20}{c}} {{{\bf{C}}^{(i)}}}&{{{\bf{S}}^{(i)}}}\\ { - {{\bf{S}}^{(i)}}}&{{{\bf{C}}^{(i)}}} \end{array}} \right], \tag{5.3} \end{equation}\]

for the trigonometric case and with \(\bf{C}^{(i)}\) and \(\bf{S}^{(i)}\) representing the \(k_i\times k_i\) diagonal matrices with elements \(cos(\lambda_j^{(i)})\) and \(sin(\lambda_j^{(i)})\) respectively,11 and

\[\begin{equation} {{\bf{A}}_i} = \left[ {\begin{array}{*{20}{c}} {{{\bf{0}}_{{m_i} - 1}}}&1\\ {{{\bf{I}}_{{m_i} - 1}}}&{{{{\bf{0'}}}_{{m_i} - 1}}} \end{array}} \right], \tag{5.4} \end{equation}\]

for the regular seasonality case, with \(\oplus\) being the direct sum of matrices operator. Finally, the state transition matrix \(\bf{F}\) is composed as follows:

\[\begin{equation} {\bf{F}} = \left[ {\begin{array}{*{20}{c}} 1&\phi &{{{\bf{0}}_\tau }}&{\alpha \theta }&{\alpha \psi }\\ 0&\phi &{{{\bf{0}}_\tau }}&{\beta \theta }&{\beta \psi }\\ {{{{\bf{0'}}}_\tau }}&{{{{\bf{0'}}}_\tau }}&{\bf{A}}&{\bf{B}}&{\bf{C}}\\ 0&0&{{{\bf{0}}_\tau }}&\theta &\psi \\ {{{{\bf{0'}}}_{p - 1}}}&{{{{\bf{0'}}}_{p - 1}}}&{{{\bf{O}}_{p - 1,\tau }}}&{{{\bf{I}}_{p - 1,p}}}&{{{\bf{O}}_{p - 1,q}}}\\ 0&0&{{{\bf{0}}_\tau }}&{{{\bf{0}}_p}}&{{{\bf{0}}_q}}\\ {{{{\bf{0'}}}_{q - 1}}}&{{{{\bf{0'}}}_{q - 1}}}&{{{\bf{O}}_{q - 1,\tau }}}&{{{\bf{O}}_{q - 1,p}}}&{{{\bf{I}}_{q - 1,q}}} \end{array}} \right] \tag{5.5} \end{equation}\]

where \(\tau = 2\sum\limits_{i = 1}^T {{k_i}}\) for the trigonometric case and \(\tau = \sum\limits_{i = 1}^T {{m_i}}\) for the regular seasonality case.

5.2 State Initialization

A key innovation of the De Livera, Hyndman, and Snyder (2011) paper is providing the exact initialization of the non-stationary component’s seed states, the exponential smoothing analogue of the De Jong et al. (1991) method for augmenting the Kalman filter to handle seed states with infinite variances. The proof, based on De Livera, Hyndman, and Snyder (2011) and expanded here is as follows:

Let:

\[\begin{equation} {\bf{D}} = {\bf{F}} - {\bf{gw'}}. \tag{5.6} \end{equation}\]

We eliminate \(\varepsilon_t\) in (5.1) to give:

\[\begin{equation} {{\bf{x}}_t} = {\bf{D}}{{\bf{x}}_{t - 1}} + {\bf{g}}{y_t}. \tag{5.7} \end{equation}\]

Next, we proceed by backsolving the equation for the error, given a given value of \(\lambda\):12

\[\begin{equation} \begin{array}{l} {\varepsilon _t} = {y_t} - {\bf{w}}{{{\bf{\hat x}}}_{t - 1}},\\ {\varepsilon _t} = {y_t} - {\bf{w'}}\left( {{\bf{D}}{{{\bf{\hat x}}}_{t - 2}} + {\bf{g}}{y_{t - 1}}} \right). \end{array} \tag{5.8} \end{equation}\]

Starting with \(t = 4\) and working backwards:

\[\begin{equation} \begin{array}{l} {\varepsilon _4} &= {y_4} - {\bf{w'}}\left( {{\bf{D}}{{{\bf{\hat x}}}_2} + {\bf{g}}{y_3}} \right)\\ &= {y_4} - {\bf{w'}}\left( {{\bf{D}}\left( {{\bf{D}}{{{\bf{\hat x}}}_1} + {\bf{g}}{y_2}} \right) + {\bf{g}}{y_3}} \right)\\ &= {y_4} - {\bf{w'}}\left( {{\bf{D}}\left( {{\bf{D}}\left( {{\bf{D}}{{{\bf{\hat x}}}_0} + {\bf{g}}{y_1}} \right) + {\bf{g}}{y_2}} \right) + {\bf{g}}{y_3}} \right)\\ &= {y_4} - {\bf{w'}}\left( {{\bf{D}}\left( {{{\bf{D}}^2}{{\bf{x}}_0} + {\bf{Dg}}{y_1} + {\bf{g}}{y_2}} \right) + {\bf{g}}{y_3}} \right)\\ &= {y_4} - {\bf{w'}}\left( {{{\bf{D}}^3}{{\bf{x}}_0} + {{\bf{D}}^2}{\bf{g}}{y_1} + {\bf{Dg}}{y_2} + {\bf{g}}{y_3}} \right)\\ &= {y_4} - {\bf{w'}}\sum\limits_{j = 1}^3 {{{\bf{D}}^{j - 1}}{\bf{g}}{y_{4 - j}} - {\bf{w'}}{{\bf{D}}^3}{{\bf{x}}_0}} \\ \end{array} \tag{5.9} \end{equation}\]

and generalizing to \(\varepsilon_t\):

\[\begin{equation} \begin{array}{l} {\varepsilon _t} &= {y_t} - {\bf{w'}}\left( {\sum\limits_{j = 1}^{t - 1} {{{\bf{D}}^{j - 1}}{\bf{g}}{y_{t - j}}} } \right) - {\bf{w'}}{{\bf{D}}^{t - 1}}{{\bf{x}}_0}\\ &= {y_t} - {\bf{w'}}{{{\bf{\tilde x}}}_{t - 1}} - {{{\bf{w'}}}_{t - 1}}{{\bf{x}}_0}\\ &= {{\tilde y}_t} - {{{\bf{w'}}}_{t - 1}}{{\bf{x}}_0}, \end{array} \tag{5.10} \end{equation}\]

where \({{\tilde y}_t} = {y_t} - {\bf{w'}}{{{\bf{\tilde x}}}_{t - 1}}\), \({{{\bf{\tilde x}}}_t} = {\bf{D}}{{{\bf{\tilde x}}}_{t - 1}} + {\bf{g}}{y_t}\), \({{{\bf{w'}}}_t} = {\bf{D}}{{{\bf{w'}}}_{t - 1}}\), \({{{\bf{\tilde x}}}_0} = 0\) and \({{{\bf{w'}}}_0} = {\bf{w'}}\), so that \(\bf{x}_0\) are the coefficients from the regression of \(\bf{w}\) on \(\boldsymbol{\varepsilon}\). While this approach bypasses the need to estimate the initial states by augmenting the parameter vector, which could be very costly for multiple seasonality or large seasonal periods, it still requires one full iteration for \(i=1,\dots,t\) to calculate \(\boldsymbol{\varepsilon}\) and \(\bf{w}\) and then one inversion to get the coefficients for every new set of parameters (i.e. for each new candidate set in the optimization).

5.3 Log-Likelihood

The log-likelihood (\(L\)) of the model follows from the assumption that the innovations \(\varepsilon_t\sim N\left(0, \sigma^2\right)\), leading to the following form for the transformed series \(y^{\lambda}_t\):

\[\begin{equation} L\left( \theta \right) = - \frac{T}{2}\log \left( {2\pi {{\sigma }^2}} \right) - \frac{1}{{2{{\sigma }^2}}}\sum\limits_{t = 1}^T {\varepsilon _t^2 + \left( {\lambda - 1} \right)\sum\limits_{t = 1}^T {\log {y_t}} }. \tag{5.11} \end{equation}\]

Concentrating out \(\sigma^2\) with its maximum likelihood estimate, \({\hat \sigma^2} = {T^{ - 1}}\sum\limits_{t = 1}^T {\varepsilon_t^2}\), eliminating constants and taking the negative for minimization in the optimization routine leads to the following form:

\[\begin{equation} L\left( \theta \right) = T\log \sum\limits_{t = 1}^T {\varepsilon _t^2} - 2\left( {\lambda - 1} \right)\sum\limits_{t = 1}^T {\log {y_t}}, \tag{5.12} \end{equation}\]

where \(\theta\) is the vector of parameters being optimized. The returned value of calling method logLik on an object of class tsissm.estimate is that of equation (5.11).

5.4 Package Implementation

The implementation of the model in the tsissm package differs significantly

from the one provided by R. Hyndman et al. (2022) in the tbats and bats functions, mainly

in terms of a more flexible specification object and more complete methods for

working with the model.

Additionally, in order to ensure that the parameters are within the forecastability region, we constrain the characteristics roots of the matrix \(\bf{D}\) in (5.2), representing the non-stationary components to be inside the unit circle, and also constrain the ARMA roots for stationarity.13

5.5 Demonstration

5.5.1 Specification

The specification function defines the entry point for setting up an issm model:

library(tsissm)

args(issm_modelspec)## function (y, slope = TRUE, slope_damped = FALSE, seasonal = FALSE,

## seasonal_frequency = 1, seasonal_type = c("trigonometric",

## "regular"), seasonal_harmonics = NULL, ar = 0, ma = 0,

## xreg = NULL, transformation = "box-cox", lambda = 1, lower = 0,

## upper = 1, sampling = NULL, ...)

## NULLThe specification has options for slope, dampening, seasonality (trigonometric and regular),14 AR and MA terms and external regressors. Additionally, lambda can be fixed or

estimated (by setting lambda = NA). Automatic selection model selection and ranking based on AIC

is available via the auto_issm function.

5.5.2 Estimation

We showcase the functionality of the package using the electricload dataset from the tsdatasets package which represents total hourly electricity load in Greece in MW as published on ENTSO-E Transparency Platform, for the period 2016-10-17 to 2019-04-30. The series appears to have multiple seasonality with periodicity 24, \(24\times 7\) and \(24\times 7 \times 52\), which we model with trigonometric terms.

library(tsissm)

data("electricload", package = "tsdatasets")

# specification

spec <- issm_modelspec(electricload, slope = T, seasonal = TRUE,

seasonal_frequency = c(24, 4500),

seasonal_type = "trigonometric",

seasonal_harmonics = c(6, 6), ar = 2, ma = 2,

lambda = 0.25)

# estimation

mod <- suppressMessages(estimate(spec, solver = "nlminb"))

mod$opt$elapsed## Time difference of 2.284488 minssummary(mod)## ISSM Model: AAA(24{6}/4500{6})+ARMA(2,2)

##

## Parameter Est[Value] Lower Upper Std. Error t value Pr(>|t|)

## ------------ ----------- ------ ------ ----------- --------- ---------

## alpha 0.2961 0.00 0.99 0.1381 2.1436 0.0321

## beta 0.0000 0.00 0.99 NaN NaN NaN

## gamma24.1 0.0345 -0.01 0.99 0.0009 38.5761 0.0000

## gamma24.2 0.0147 -0.01 0.99 0.0006 24.9130 0.0000

## gamma4500.1 0.1805 -0.01 0.99 0.0231 7.8308 0.0000

## gamma4500.2 -0.0100 -0.01 0.99 NaN NaN NaN

## theta1 -0.3438 -0.99 0.99 0.0104 -33.1414 0.0000

## theta2 -0.4971 -0.99 0.99 0.0096 -51.5499 0.0000

## psi1 -0.1066 -0.99 0.99 0.0072 -14.7263 0.0000

## psi2 0.7020 -0.99 0.99 0.0066 105.7564 0.0000

## lambda 0.2500 0.25 0.25 NaN NaN NaN

##

## tsissm: Performance Metrics

## ----------------------------------

## AIC : 437349.96 (n = 40)

## MAPE : 0.01662

## BIAS : 0.00023

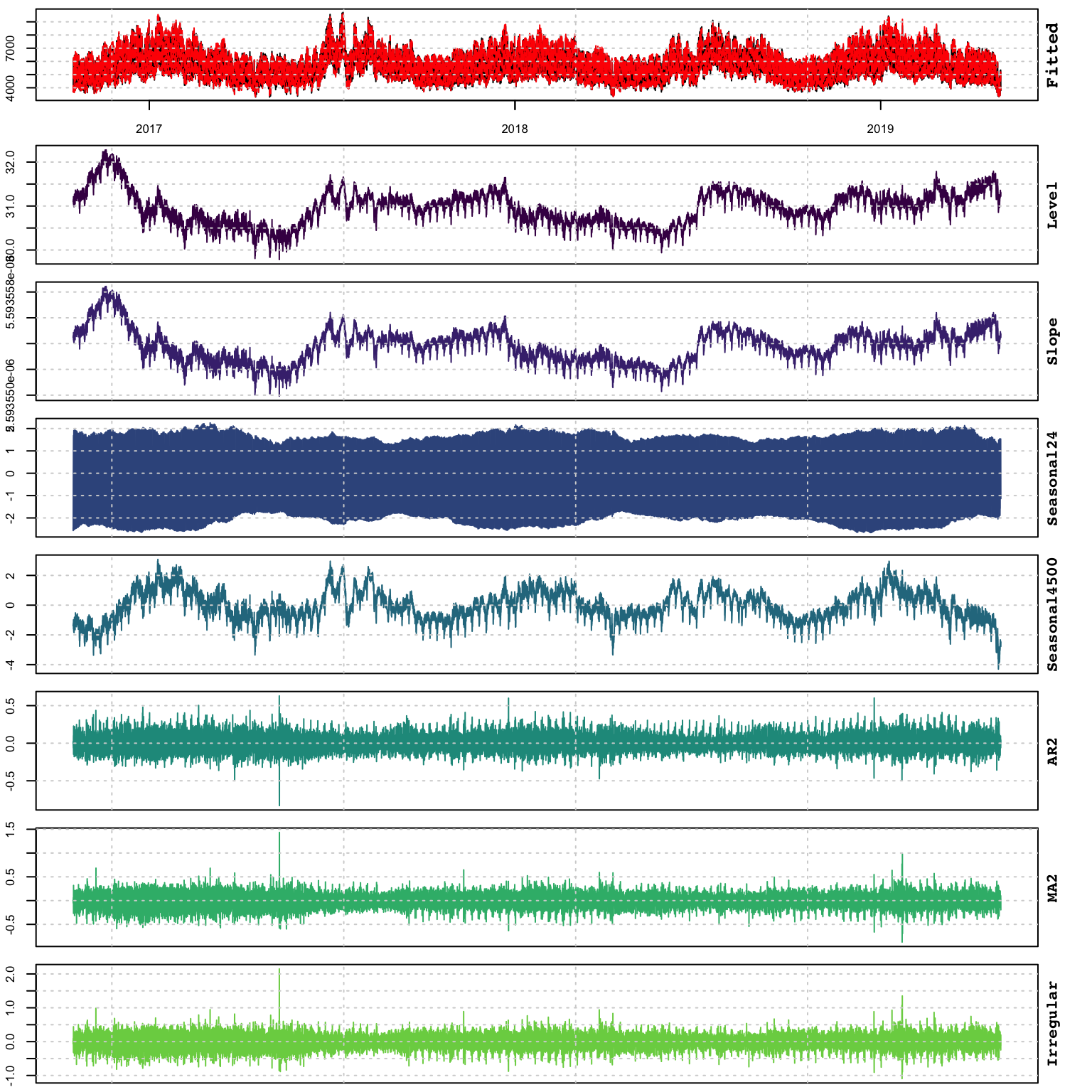

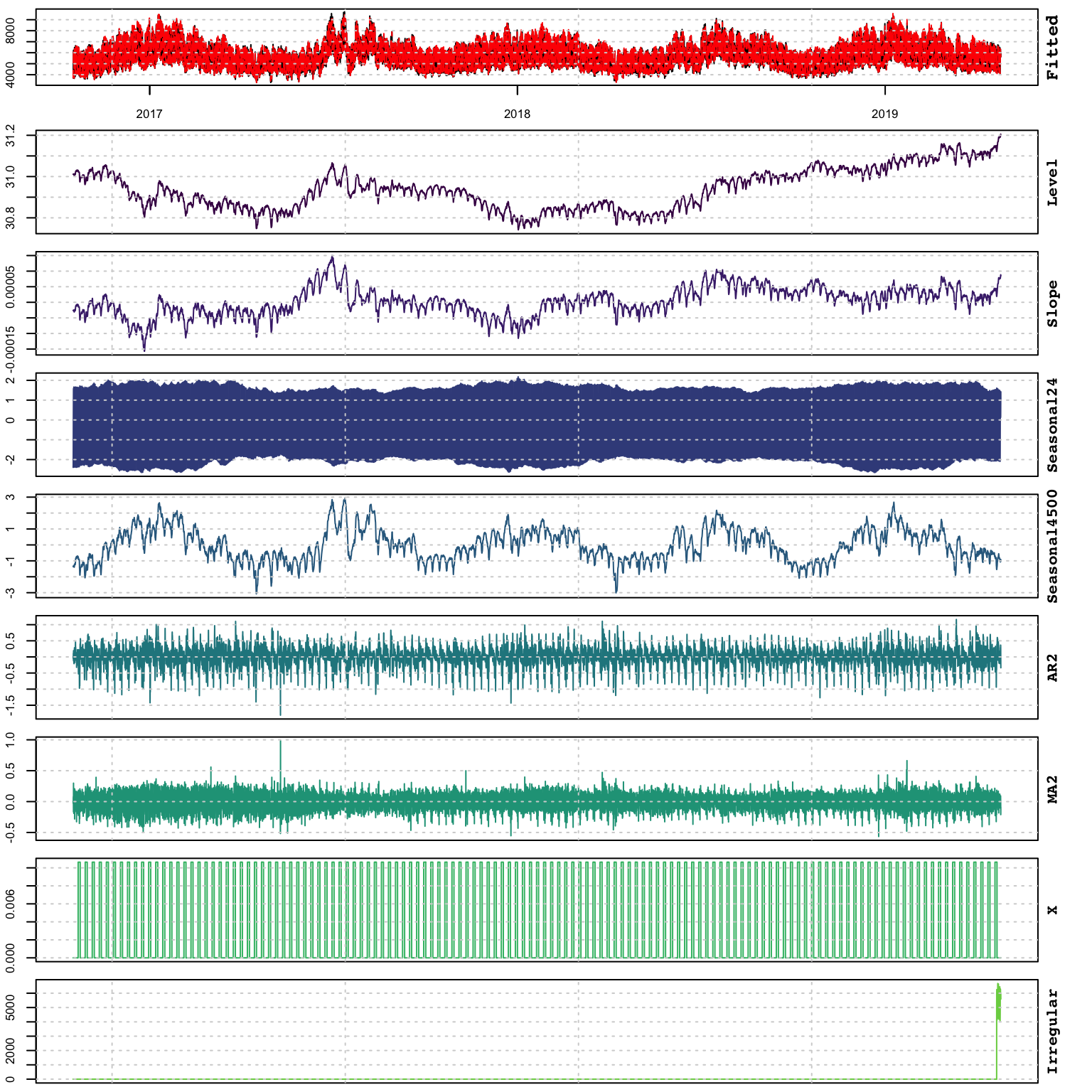

## MSLRE : 0.00048The plot method decomposes the estimated model into it’s components:

plot(mod)

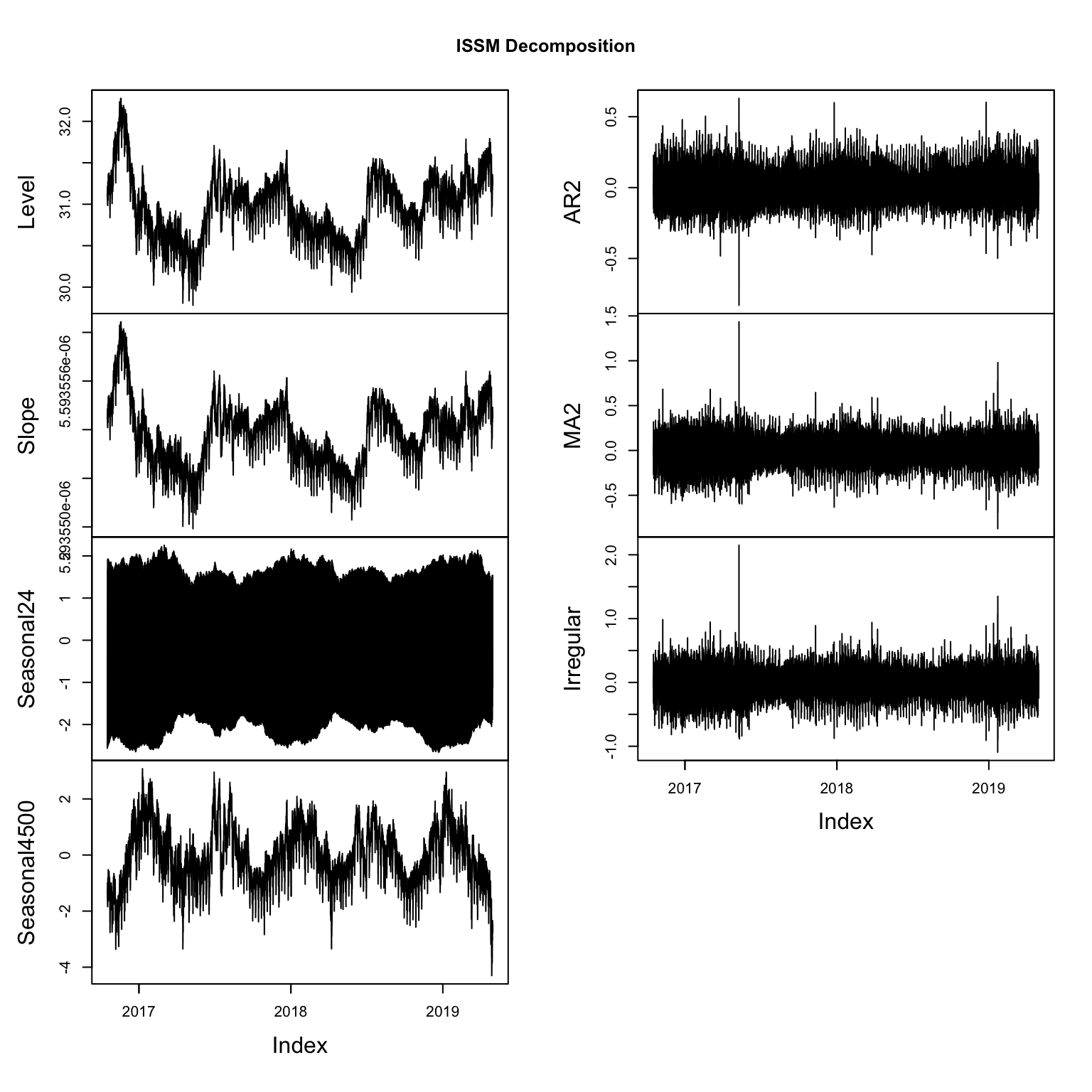

but we can also extract these directly and plot them using the tsdecompose method:

td <- tsdecompose(mod)

plot(as.zoo(td), main = "ISSM Decomposition")

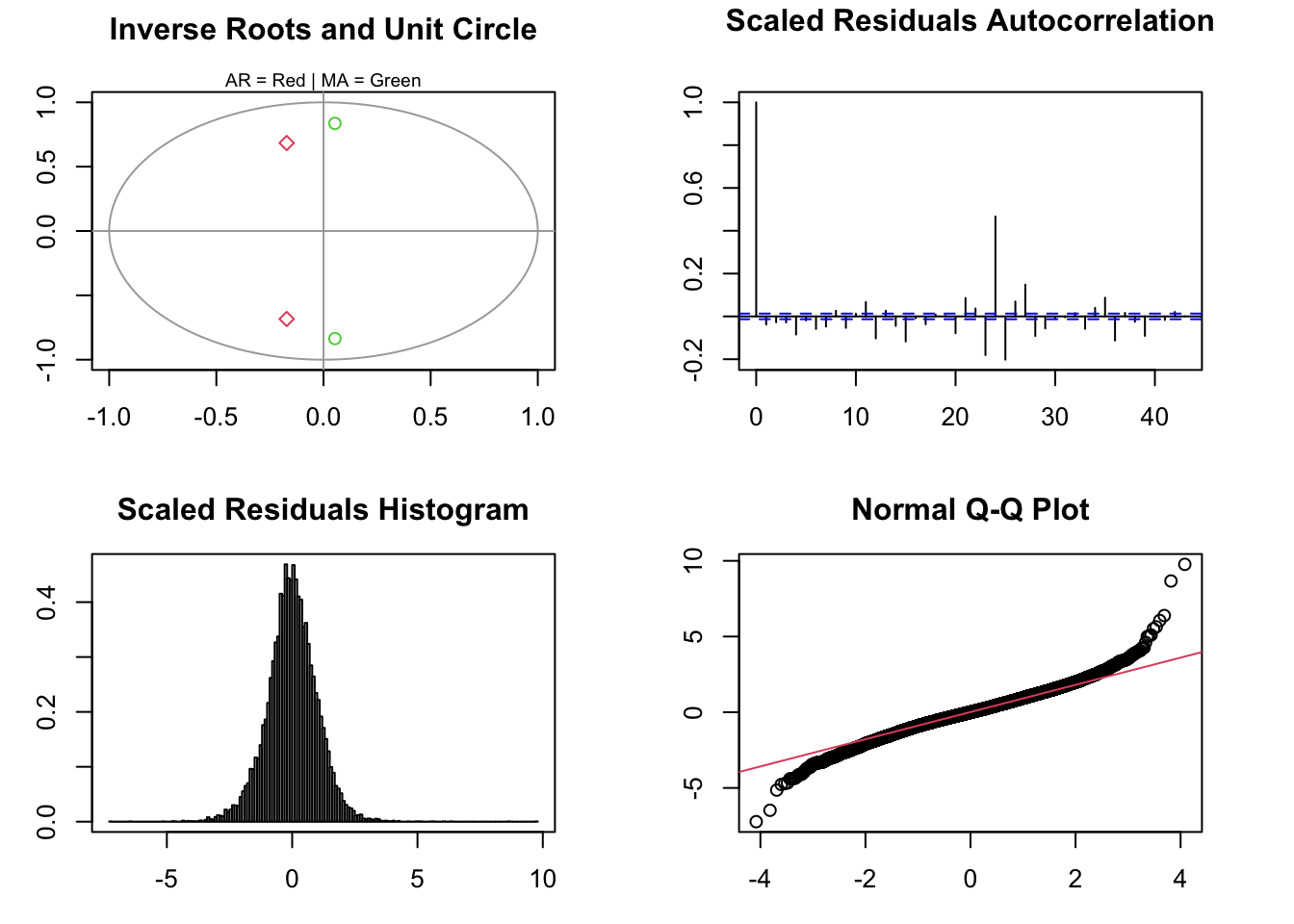

Additional inference methods include diagnostics (tsdiagnose) as well as

standard extractors for the coefficients (coef), log-likelihood (logLik) and AIC (AIC):

tsdiagnose(mod, plot = TRUE)##

## ARMA roots

## ------------------------------------------

## Inverse AR roots: 0.7050694 0.7050694

## Inverse MA roots: 0.8378323 0.8378323

##

## Forecastability (D roots)

## ------------------------------------------

## Real Eigenvalues (D): 1 1 1 1 1 1 1 1 1 1 1 1 1 0.964 0.964 0.862 0.862 0.701 0.701 0.492 0.492 0.248 0.248 0.015 0.015 0.053 0.053 0.496 0 0

##

## Weighted Ljung-Box Test [scaled residuals]

## ------------------------------------------

## Lag statistic pvalue

## <char> <num> <num>

## Lag[1] 31.8 1.7e-08

## Lag[11] 280.3 0.0e+00

## Lag[11] 280.3 0.0e+00

## Lag[11] 280.3 0.0e+00

##

## Outlier Diagnostics (based on Rosner Test)

## ------------------------------------------

## Outliers: 2017-05-10 19:00:00 2019-01-22 14:00:00 2019-01-22 08:00:00 2019-01-22 17:00:00 2019-01-22 09:00:00 2019-01-22 05:00:00 2019-01-12 07:00:00 2017-03-02 17:00:00 2016-11-29 10:00:00 2016-11-08 17:00:00

coef(mod)## alpha beta gamma24.1 gamma24.2 gamma4500.1

## 2.960931e-01 1.000000e-12 3.447394e-02 1.469827e-02 1.805444e-01

## gamma4500.2 theta1 theta2 psi1 psi2

## -1.000000e-02 -3.437839e-01 -4.971229e-01 -1.066188e-01 7.019629e-01logLik(mod)## 'log Lik.' -138954.3 (df=41)AIC(mod)## [1] 4373505.5.3 Prediction

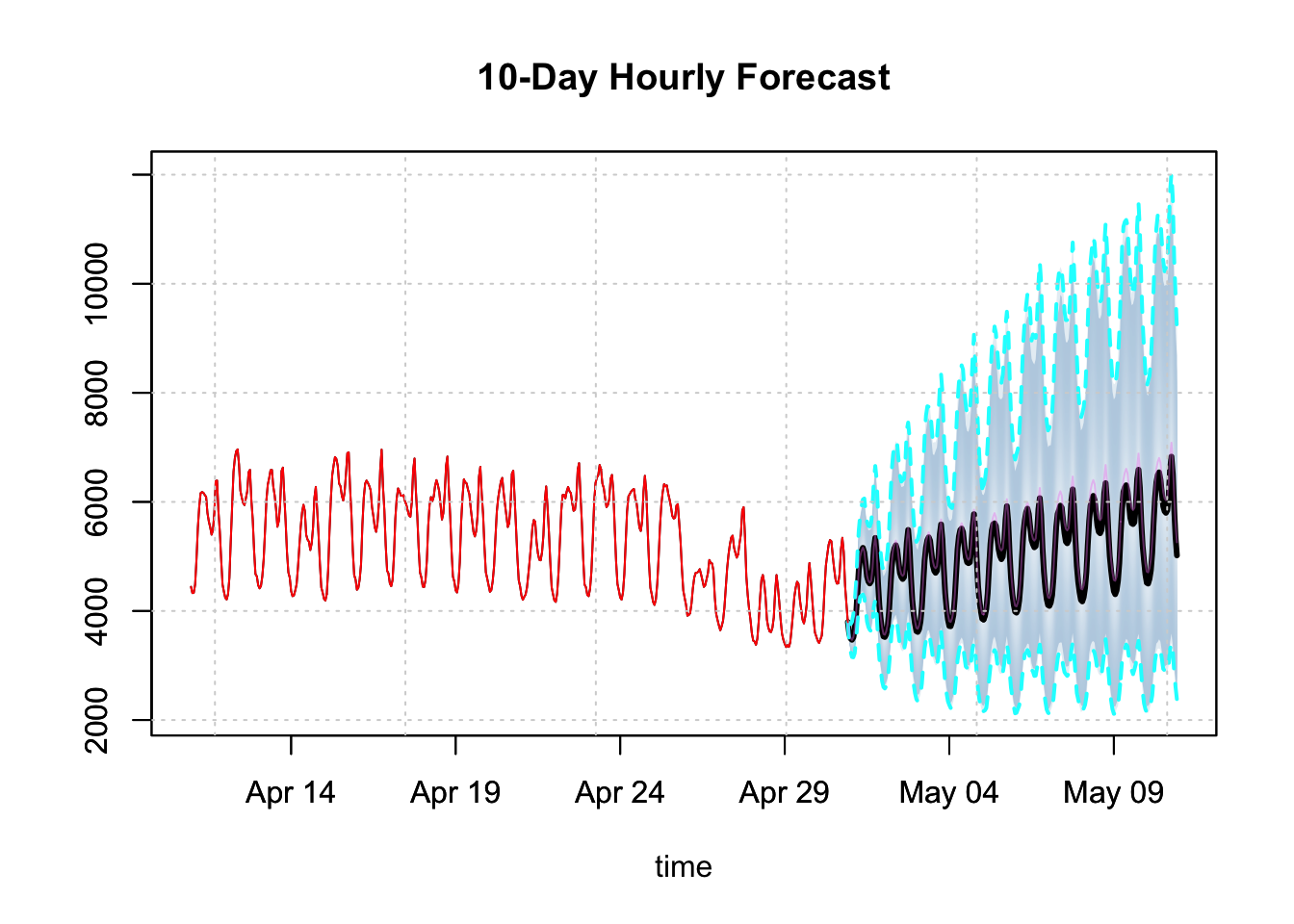

Similar to the other packages in the tsmodels framework, prediction builds a distribution

of possible paths by simulation, outputting an object of class tsmodel.predict:

p <- predict(mod, h = 24*10)

plot(p, n_original = 24*20, main = "10-Day Hourly Forecast")

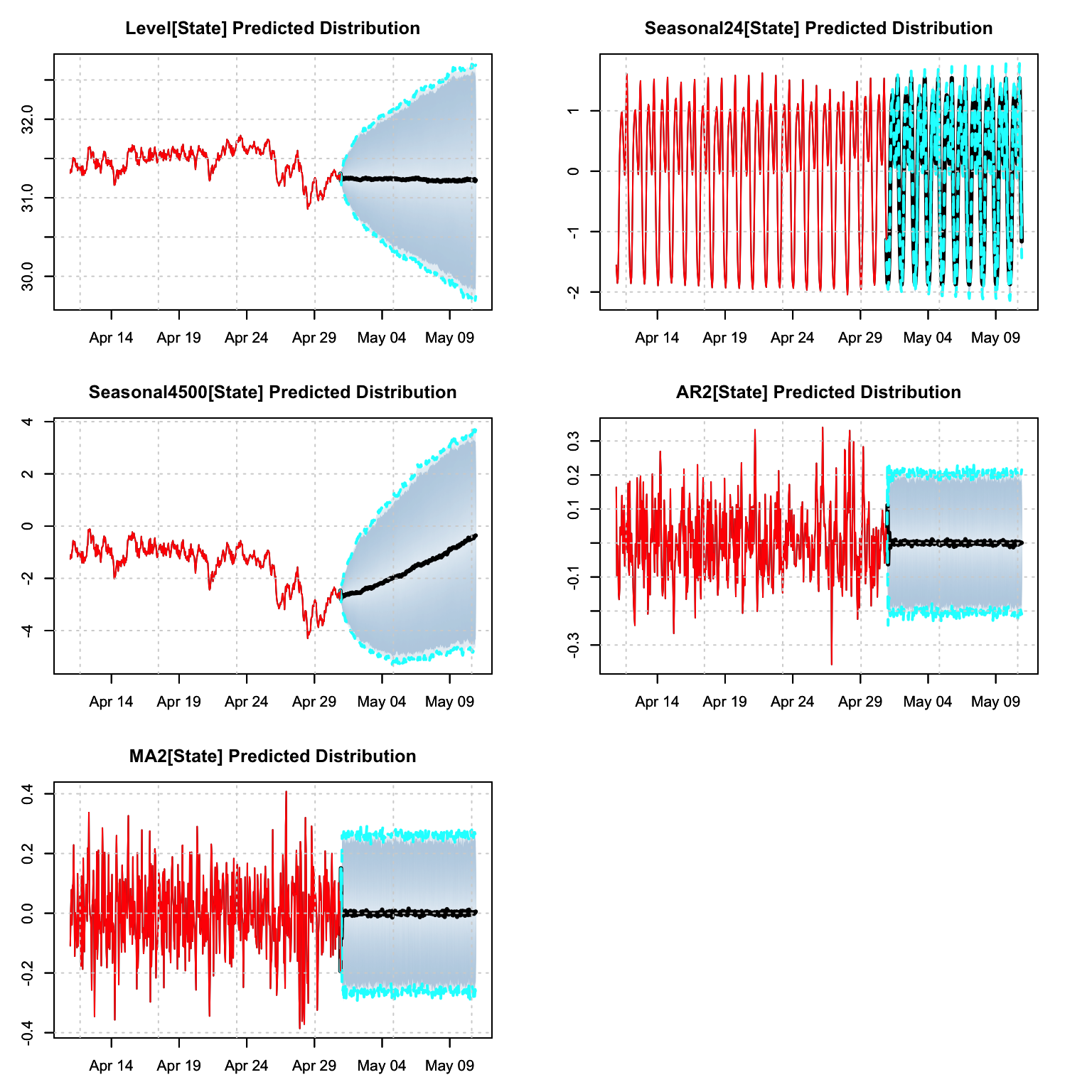

The predict object also has a decomposition method:

td <- tsdecompose(p)

par(mfrow = c(3,2),mar = c(3,3,3,3))

plot(td$Level, n_original = 24*20, main = "Level[State] Predicted Distribution")

plot(td$Seasonal24, n_original = 24*20,

main = "Seasonal24[State] Predicted Distribution")

plot(td$Seasonal4500, n_original = 24*20,

main = "Seasonal4500[State] Predicted Distribution")

plot(td$AR2, n_original = 24*20, main = "AR2[State] Predicted Distribution")

plot(td$MA2, n_original = 24*20, main = "MA2[State] Predicted Distribution")

5.5.4 Simulation

Simulation of an estimated object has options for changing the coefficients as well as the initial states, as well as the option for providing custom innovations or bootstrapped innovations:

args(tsissm:::simulate.tsissm.estimate)## function (object, nsim = 1, seed = NULL, h = NULL, newxreg = NULL,

## sim_dates = NULL, bootstrap = FALSE, innov = NULL, sigma_scale = 1,

## pars = coef(object), init_states = object$spec$xseed, ...)

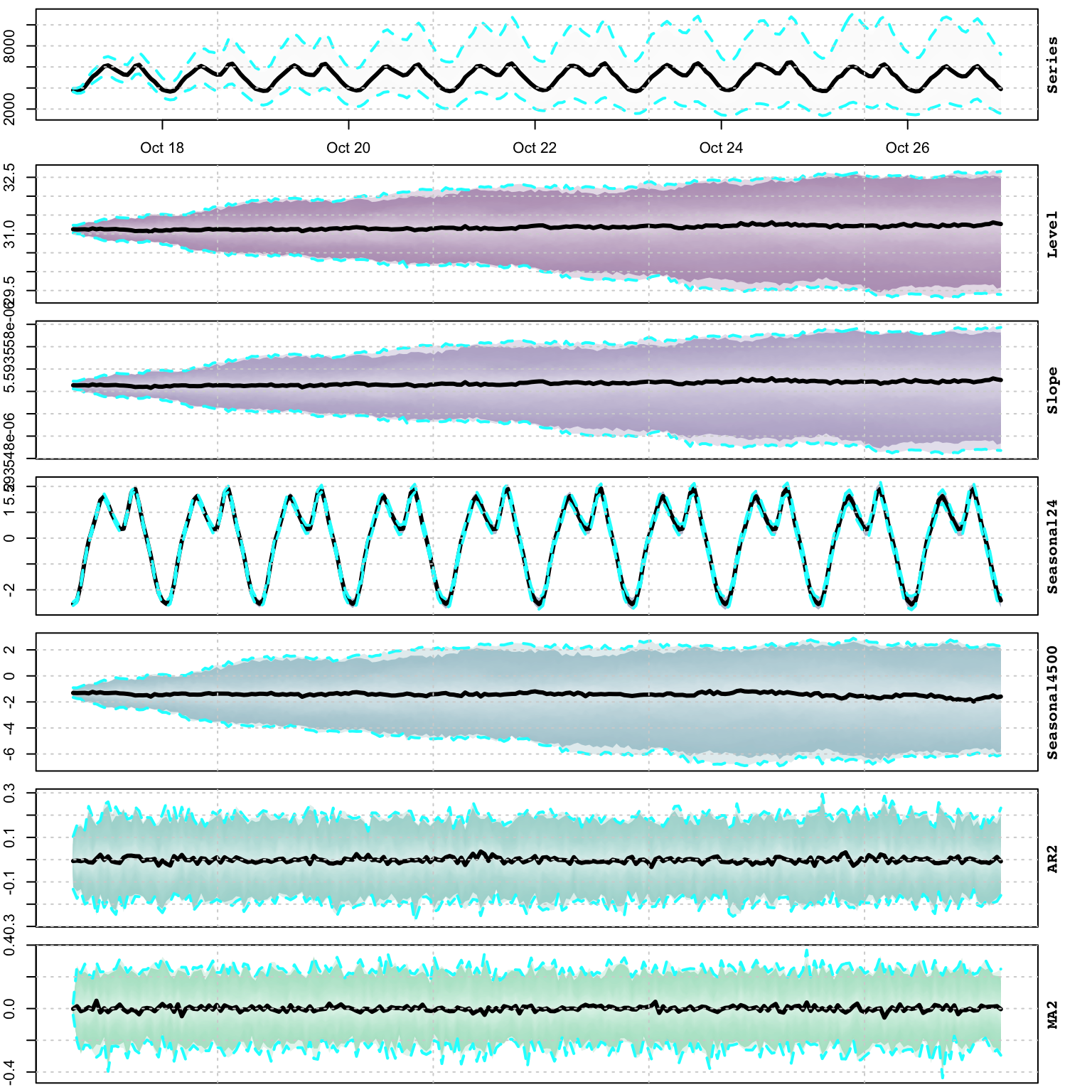

## NULLsim <- simulate(mod, nsim = 100, h = 24 * 10, bootstrap = TRUE)

plot(sim)

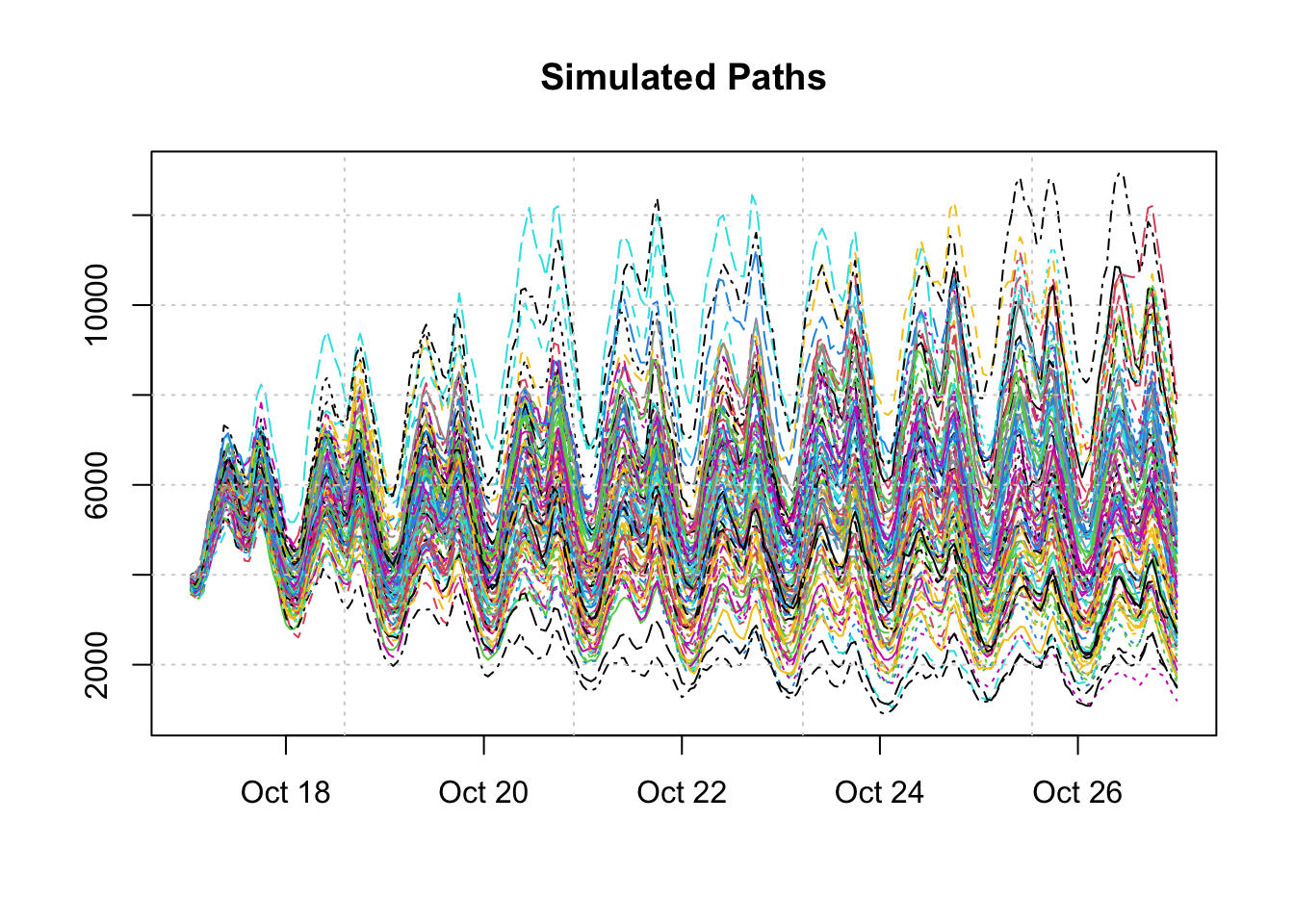

While the plot function on a simulated object provides a decomposition of the actual and state components distributions, it is useful to remind ourselves that the distribution bands represent the range of uncertainty of multiple paths. This is best illustrated by plotting these separately:

matplot(as.POSIXct(colnames(sim$Simulated)), t(sim$Simulated), type = "l",

col = 1:100, ylab = "", xlab = "", main = "Simulated Paths")

grid()

5.5.5 Filtering

Online filtering is when new data arrives and instead of re-estimating the model,

we instead just filter the new data based on an existing model. In the tsissm

package the tsfilter method updates an object of class tsmodel.estimate with

new data as the example below illustrates. Because the class of the model

is retained and only updated (both data and states) with new information,

it is also possible to apply any method to that which admits that object (e.g. predict).

library(tsissm)

suppressMessages(library(xts))

weekends <- xts(matrix(0, ncol = 1, nrow = nrow(electricload)), index(electricload))

weekends[which(weekdays(as.Date(index(weekends))) %in% c("Saturday","Sunday"))] <- 1

colnames(weekends) <- "weekend"

spec <- issm_modelspec(electricload[1:22000], slope = TRUE, seasonal = TRUE,

seasonal_frequency = c(24, 4500), xreg = weekends[1:22000],

seasonal_type = "trigonometric",

seasonal_harmonics = c(6, 6), ar = 2, ma = 2,

lambda = 0.25)

mod <- estimate(spec, solver = "nlminb")

f1 <- tsfilter(mod, y = electricload[22001:22100], newxreg = weekends[22001:22100])

tail(fitted(mod))## [,1]

## 2019-04-21 10:00:00 5602.398

## 2019-04-21 11:00:00 5505.524

## 2019-04-21 12:00:00 5089.799

## 2019-04-21 13:00:00 4911.070

## 2019-04-21 14:00:00 4831.108

## 2019-04-21 15:00:00 5042.946head(tail(fitted(f1), 100),10)## [,1]

## 2019-04-21 16:00:00 5446.961

## 2019-04-21 17:00:00 5795.905

## 2019-04-21 18:00:00 6158.819

## 2019-04-21 19:00:00 5908.761

## 2019-04-21 20:00:00 5284.804

## 2019-04-21 21:00:00 4888.972

## 2019-04-21 22:00:00 4499.164

## 2019-04-21 23:00:00 4216.602

## 2019-04-22 00:00:00 4185.699

## 2019-04-22 01:00:00 4105.256plot(f1)

f2 <- tsfilter(f1, y = electricload[22100:22200], newxreg = weekends[22100:22200])

tail(fitted(f1))## [,1]

## 2019-04-25 14:00:00 5741.715

## 2019-04-25 15:00:00 5759.517

## 2019-04-25 16:00:00 5970.261

## 2019-04-25 17:00:00 6054.623

## 2019-04-25 18:00:00 6082.171

## 2019-04-25 19:00:00 5672.456head(tail(fitted(f2), 100),10)## [,1]

## 2019-04-25 20:00:00 5079.625

## 2019-04-25 21:00:00 4757.516

## 2019-04-25 22:00:00 4393.659

## 2019-04-25 23:00:00 4187.004

## 2019-04-26 00:00:00 4145.853

## 2019-04-26 01:00:00 4059.955

## 2019-04-26 02:00:00 3973.271

## 2019-04-26 03:00:00 4221.322

## 2019-04-26 04:00:00 4393.471

## 2019-04-26 05:00:00 4598.8625.5.6 Profiling

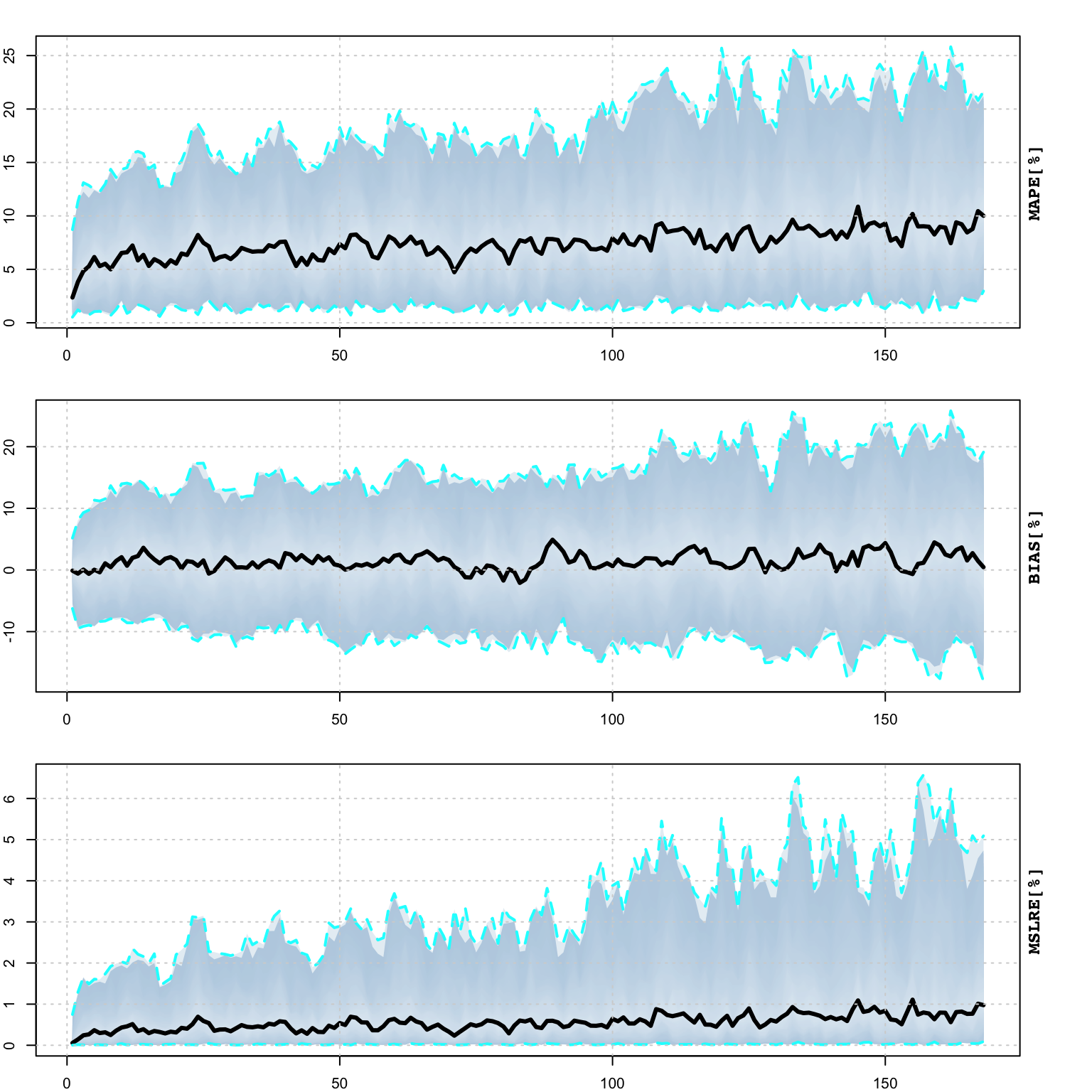

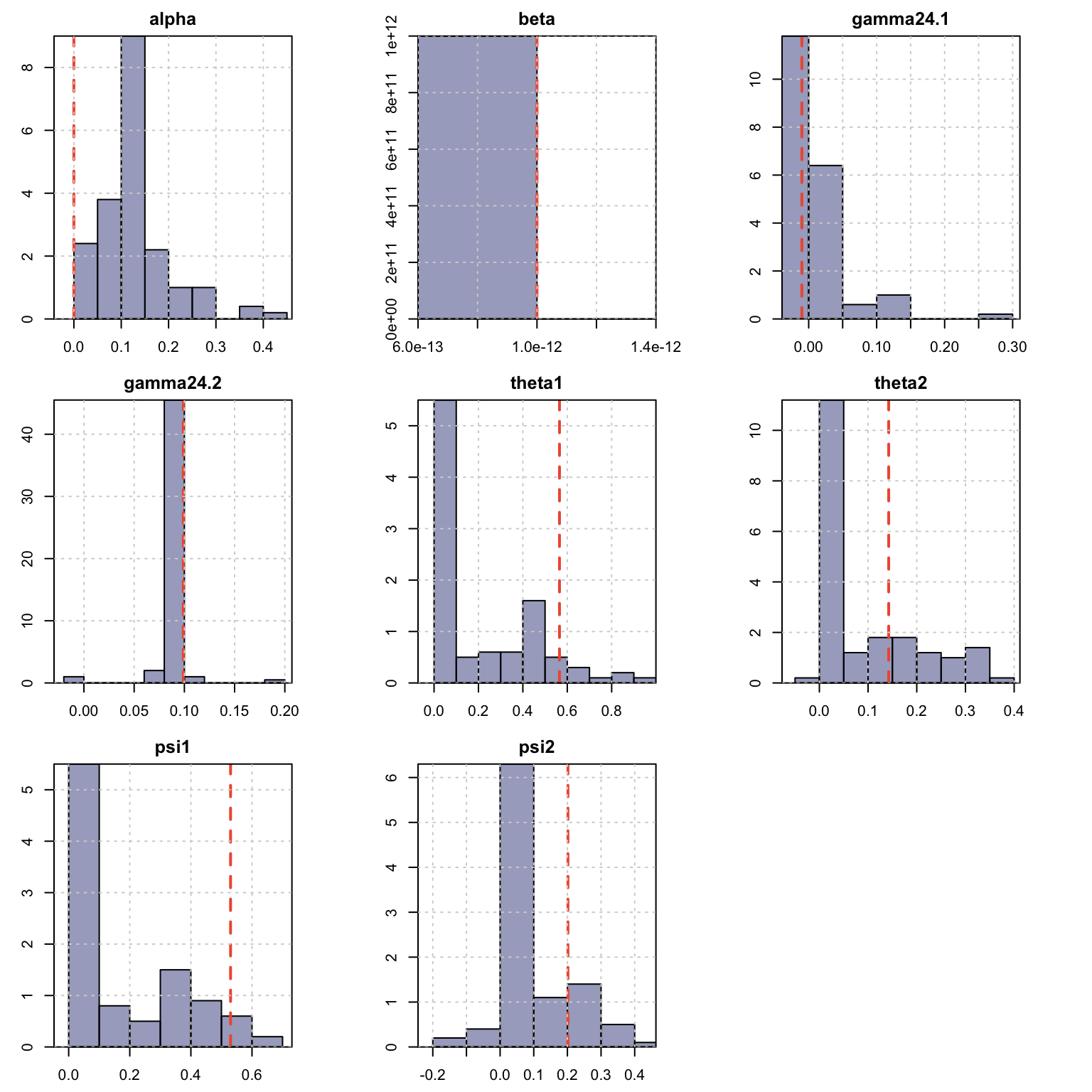

The tsprofile function profiles an estimated model by simulating and then estimating multiple paths from the assumed DGP while leaving h values out for prediction evaluation. Each simulated path is equal to the size of the original dataset plus h additional values, and initialized with the initial state vector from the model. The resulting output contains the distribution of the MAPE, percent bias (BIAS) and mean squared log relative error (MSLRE) per horizon h. Since these matrices are of class tsmodel.distribution they can be readily plotted with the special purpose plot function for this class from the tsmethods package. Additionally, a data.table matrix is also returned with the distribution of the coefficients from each path estimation.

As of version 0.2.0, the tsprofile method on a tsissm.estimate object does not support newxreg.

spec <- issm_modelspec(electricload[1:(24*24)], slope = T, seasonal = TRUE,

seasonal_frequency = c(24),

seasonal_type = "trigonometric",

seasonal_harmonics = c(6), ar = 2, ma = 2,

lambda = 0.25)

mod <- estimate(spec, solver = "nlminb")

prof <- tsprofile(mod, h = 24*7, nsim = 100, solver = "nlminb")## Warning: UNRELIABLE VALUE: Future ('<none>') unexpectedly generated random

## numbers without specifying argument 'seed'. There is a risk that those random

## numbers are not statistically sound and the overall results might be invalid.

## To fix this, specify 'seed=TRUE'. This ensures that proper, parallel-safe random

## numbers are produced via the L'Ecuyer-CMRG method. To disable this check, use

## 'seed=NULL', or set option 'future.rng.onMisuse' to "ignore".plot(prof)

plot(prof, type = "coef")

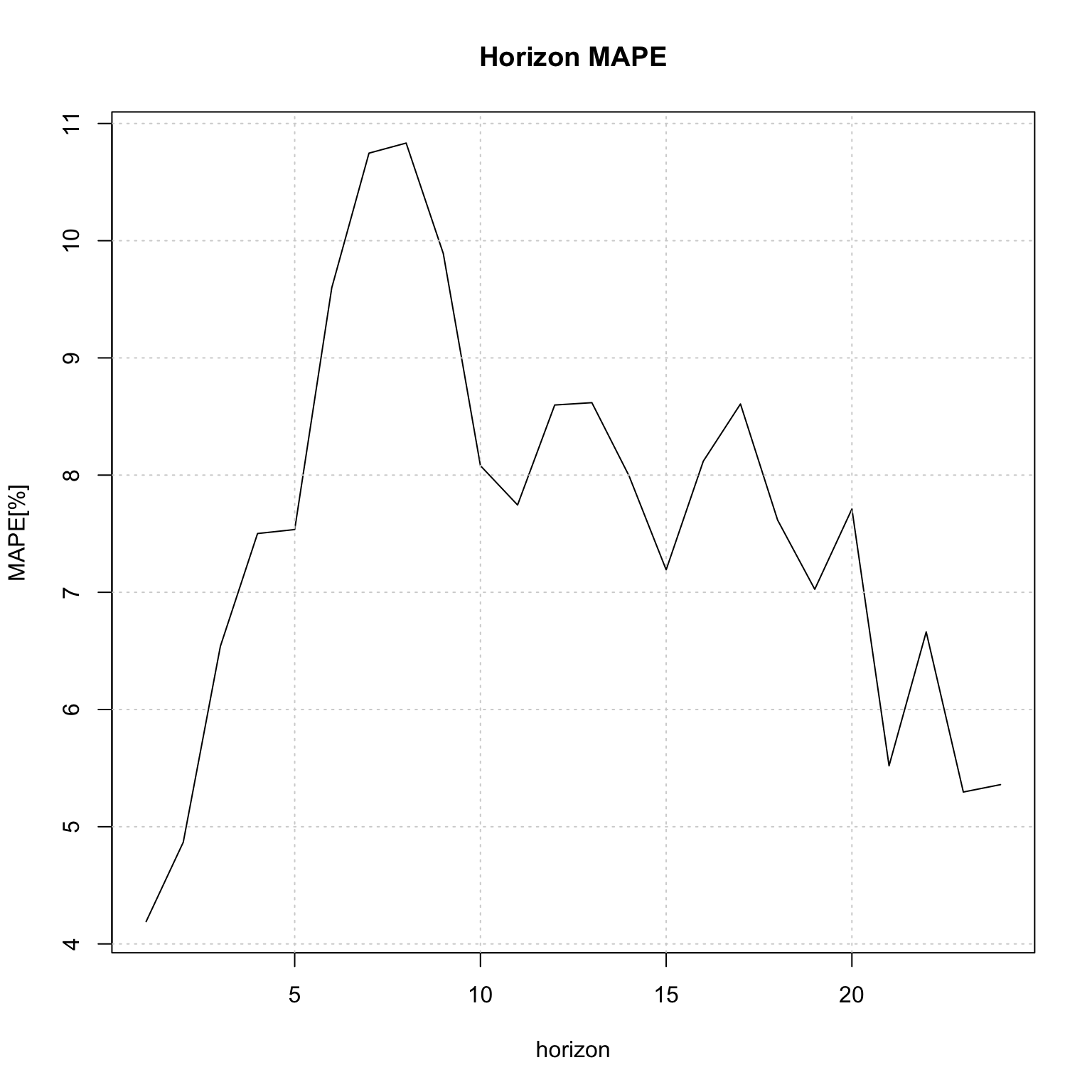

5.5.7 Backtesting

The tsbacktest method generates an expanding window walk forward backtest, returning a list with the estimation/horizon predictions against actuals as well as a table of average performance metrics by horizon. Optionally, instead of re-estimating every one period, the

option to re-estimate every n-periods is available via the estimate_every option.

suppressMessages(library(future))

plan(list(

tweak(sequential),

tweak(multisession, workers = 5)

))

spec <- issm_modelspec(electricload[1:(24*24)], slope = T, seasonal = TRUE,

seasonal_frequency = c(24),

seasonal_type = "trigonometric",

seasonal_harmonics = c(6), ar = 2, ma = 2,

lambda = 0.25)

b <- tsbacktest(spec, start = 24*12, h = 24, estimate_every = 24, alpha = c(0.02, 0.1),

data_name = "electricity", solver = "nlminb", autodiff = TRUE,

trace = FALSE)## Warning: UNRELIABLE VALUE: Future ('<none>') unexpectedly generated random

## numbers without specifying argument 'seed'. There is a risk that those random

## numbers are not statistically sound and the overall results might be invalid.

## To fix this, specify 'seed=TRUE'. This ensures that proper, parallel-safe random

## numbers are produced via the L'Ecuyer-CMRG method. To disable this check, use

## 'seed=NULL', or set option 'future.rng.onMisuse' to "ignore".head(b$metrics)## horizon variable MAPE MSLRE BIAS n MIS[0.02]

## <int> <char> <num> <num> <num> <int> <num>

## 1: 1 y 0.04190210 0.003309235 -0.0236839237 12 5998.228

## 2: 2 y 0.04867266 0.004278126 -0.0137657649 12 6205.653

## 3: 3 y 0.06539931 0.007050628 0.0008472191 12 7406.479

## 4: 4 y 0.07501513 0.008072229 -0.0090672231 12 5777.668

## 5: 5 y 0.07535560 0.009468918 -0.0222899079 12 7845.072

## 6: 6 y 0.09600599 0.013778439 -0.0254790898 12 12528.816

## MIS[0.1]

## <num>

## 1: 1774.305

## 2: 2028.161

## 3: 2361.633

## 4: 2365.149

## 5: 3083.159

## 6: 4400.748plot(b$metrics$horizon, b$metrics$MAPE*100, main = "Horizon MAPE", type = "l",

ylab = "MAPE[%]", xlab = "horizon")

grid()

plan(sequential)The variable n in the table reports the number of h-step ahead predictions made on which the average metrics were calculated.

5.5.8 Benchmarking

The tsbenchmark* method is used to benchmark a model for timing and accuracy and can be used as a unit testing function.

spec <- issm_modelspec(electricload[1:(24*50)], slope = T, seasonal = TRUE,

seasonal_frequency = c(24),

seasonal_type = "trigonometric",

seasonal_harmonics = c(6), ar = 2, ma = 2,

lambda = 0.25)

bench <- tsbenchmark(spec, solver = "nlminb", autodiff = TRUE)

print(bench)## start end spec

## <POSc> <POSc> <list>

## 1: 2022-10-13 15:05:26 2022-10-13 15:05:27 <tsissm.spec[11]>

## estimate solver control loglik

## <list> <char> <list> <num>

## 1: <tsissm.estimate[6]> nlminb 0 -7377.924print(bench$end - bench$start)## Time difference of 0.8560059 secsThe implementation of the constraints for both the \(\bf{D}\) matrix as well as the ARMA roots are non-smooth and hence care should be taken in checking the solution.

References

The following equations apply for the case when all components are present (level, slope, seasonal and ARMA).↩︎

For \(j=1,\dots,k_i\) and \(i=1,\dots,T\), representing the number of harmonics \(k\) per seasonal period \(i\).↩︎

For simplicity of exposition, \(y_t\) is equivalent to \(y_t^{(\lambda)}\).↩︎

So that the inverse of the characteristic roots are inside the unit circle.↩︎

Currently, multiple seasonality is not implemented for the regular case, but will be added in due course.↩︎